Unit-Testing Machine-Learning Pipelines in Python: A Comprehensive Guide

As machine learning (ML) moves into production systems, robust testing practices matter more than ever. Unit testing, a practice borrowed from traditional software development, helps ensure the reliability and accuracy of machine learning pipelines. This guide covers unit testing for Python-based ML pipelines: methodologies, tools, and best practices.

Understanding Machine Learning Pipelines

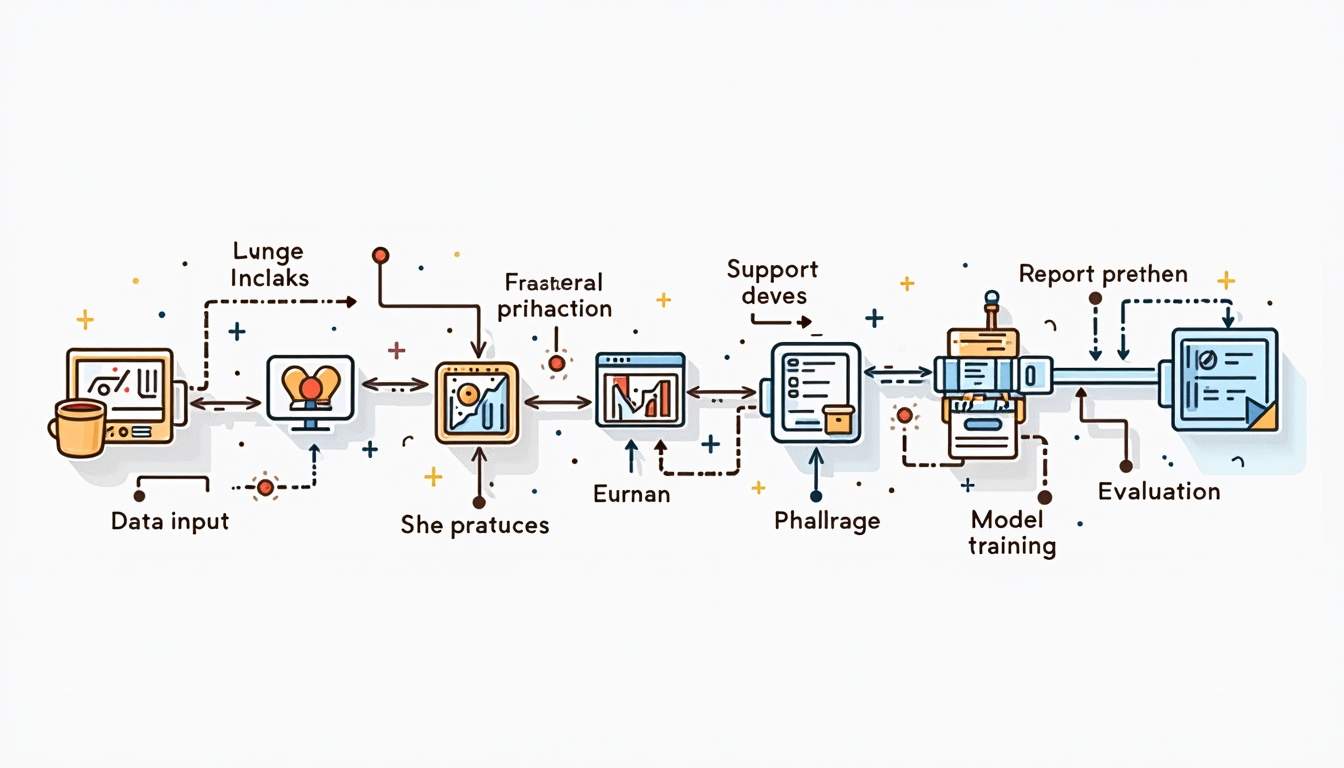

Before diving into unit testing, it's essential to comprehend what a machine learning pipeline entails. At its core, a machine learning pipeline is a series of data processing steps that transform raw data into a model ready for deployment. These steps typically include data ingestion, preprocessing, feature engineering, model training, and evaluation.

Each stage of the pipeline can be complex and may involve various components, such as data transformers, algorithms, and evaluation metrics. Given this complexity, ensuring that each part functions correctly is crucial for the overall success of the ML project. Moreover, the intricacies of these stages can vary significantly depending on the specific use case, the nature of the data, and the intended application of the model. For instance, a pipeline designed for image recognition will differ greatly from one intended for natural language processing, necessitating tailored approaches to data handling and model selection.

Components of a Machine Learning Pipeline

A typical machine learning pipeline consists of several key components:

- Data Ingestion: The process of collecting and loading data from various sources.

- Data Preprocessing: Cleaning and transforming raw data to make it suitable for analysis.

- Feature Engineering: Creating new features or modifying existing ones to improve model performance.

- Model Training: The process of training a machine learning model on the prepared dataset.

- Model Evaluation: Assessing the model's performance using various metrics.
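To make the stages above concrete, here is a minimal sketch of a toy pipeline in which every stage is a small function that can be tested on its own. All function names, the CSV columns `x` and `y`, and the trivial mean-predicting "model" are assumptions made for illustration; a real pipeline would use pandas, scikit-learn, or similar.

```python
"""A toy pipeline whose stages are small, separately testable functions."""
import csv
import io
from statistics import mean


def ingest(csv_text):
    """Data ingestion: parse CSV text into a list of row dicts."""
    return list(csv.DictReader(io.StringIO(csv_text)))


def preprocess(rows):
    """Preprocessing: drop rows with missing values and cast to float."""
    return [
        {"x": float(r["x"]), "y": float(r["y"])}
        for r in rows
        if r["x"] != "" and r["y"] != ""
    ]


def add_features(rows):
    """Feature engineering: add a squared term for x."""
    return [{**r, "x_squared": r["x"] ** 2} for r in rows]


def train(rows):
    """Model training: a deliberately trivial 'model' that predicts mean y."""
    return {"prediction": mean(r["y"] for r in rows)}


def evaluate(model, rows):
    """Model evaluation: mean absolute error of the constant prediction."""
    return mean(abs(r["y"] - model["prediction"]) for r in rows)


def run_pipeline(csv_text):
    """Chain the stages; each one can still be called and tested alone."""
    rows = add_features(preprocess(ingest(csv_text)))
    model = train(rows)
    return model, evaluate(model, rows)
```

Because each stage takes plain data in and returns plain data out, a unit test can exercise `preprocess` or `add_features` without running ingestion or training first.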

The Importance of Testing in ML Pipelines

Testing is vital in any software development process, but it poses unique challenges in machine learning. Unlike traditional software, where a given input typically yields the same output on every run, ML code can produce different results depending on the training data, random initialization, and sampling. This variability calls for tests that pin down what should stay fixed, so that changes to the pipeline do not lead to unintended consequences.
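One common way to tame this variability in tests is to fix the random seed for any stochastic step. The sketch below assumes a hypothetical `train_test_split` helper written for this example (it is not scikit-learn's function of the same name) and uses only the standard library.

```python
import random


def train_test_split(rows, test_fraction=0.25, seed=None):
    """Shuffle rows with a private RNG and split them into train and test."""
    rng = random.Random(seed)  # private generator; global state is untouched
    shuffled = list(rows)
    rng.shuffle(shuffled)
    n_test = int(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]


def test_split_is_reproducible_with_fixed_seed():
    rows = list(range(20))
    first = train_test_split(rows, seed=42)
    second = train_test_split(rows, seed=42)
    assert first == second


def test_split_keeps_every_row_exactly_once():
    rows = list(range(20))
    train, test = train_test_split(rows, seed=0)
    assert len(test) == 5
    assert sorted(train + test) == rows
```

The second test checks a property that holds for any seed (no row is lost or duplicated), which is often more useful than asserting one particular shuffled order.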

Unit testing helps identify issues at an early stage, allowing developers to catch bugs before they propagate through the pipeline. This proactive approach not only saves time but also enhances the overall quality of the machine learning product. Furthermore, implementing automated tests can provide continuous feedback during the development cycle, enabling teams to maintain high standards of code quality and model reliability. As machine learning models are often iterated upon and improved over time, having a solid testing strategy in place ensures that new features or modifications do not compromise existing functionality.

Setting Up Your Testing Environment

To effectively unit test machine learning pipelines in Python, a proper testing environment is essential. This involves selecting the right tools and frameworks that facilitate testing and ensure compatibility with the ML libraries in use.

Choosing the Right Testing Framework

Python offers several testing frameworks, with unittest and pytest being the most popular. Both frameworks provide robust features for writing and executing tests, but they have different strengths.

unittest is part of the Python standard library, making it readily available without additional installations. It follows a class-based structure, which some developers may find cumbersome for larger test suites. On the other hand, pytest is known for its simplicity and flexibility, allowing for more readable test cases through its use of function-based tests and powerful fixtures.
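The difference in style is easiest to see side by side. The sketch below tests the same hypothetical `clip` helper (invented for this example) once in each style. The pytest-style function needs no import of pytest itself, because pytest collects any function whose name starts with `test_`.

```python
import unittest


def clip(values, low, high):
    """Hypothetical helper: clamp every value into [low, high]."""
    return [min(max(v, low), high) for v in values]


# unittest style: a TestCase subclass with assert* methods.
class TestClip(unittest.TestCase):
    def test_values_are_clamped(self):
        self.assertEqual(clip([-5, 3, 12], 0, 10), [0, 3, 10])


# pytest style: a plain function and a bare assert. When run under pytest,
# a failing assert is reported with the values of both operands.
def test_values_are_clamped():
    assert clip([-5, 3, 12], 0, 10) == [0, 3, 10]
```

pytest can also run `unittest.TestCase` classes, so an existing unittest suite does not have to be rewritten before adopting it.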

Setting Up Your Project Structure

Organizing your project structure is crucial for maintaining clarity and ease of testing. A common approach is to separate the main application code from the test code. This can be achieved by creating a directory structure like the following:

    my_ml_project/
        src/
            pipeline/
            models/
        tests/
            pipeline/
            models/

In this structure, the src directory contains the main codebase, while the tests directory houses all testing scripts and mirrors the layout of src, so the tests for a given module are easy to find. The separation also keeps test code and test-only dependencies out of the packaged application.

Writing Unit Tests for Machine Learning Pipelines

With the environment set up, the next step is to write unit tests for the various components of the machine learning pipeline. Each component should be tested in isolation to ensure that it behaves as expected.

Testing Data Ingestion

Data ingestion is the first step in the pipeline, and it is crucial to ensure that it correctly loads data from the intended sources. Unit tests for this component should verify that the data is loaded without errors and that it meets the expected format.

    import unittest

    from pipeline.data_ingestion import load_data

    # Placeholder: set this to the known row count of data/source.csv.
    EXPECTED_ROW_COUNT = 100


    class TestDataIngestion(unittest.TestCase):
        def test_load_data(self):
            data = load_data('data/source.csv')
            self.assertIsNotNone(data)
            self.assertEqual(data.shape[0], EXPECTED_ROW_COUNT)
            self.assertIn('expected_column', data.columns)

In this example, the test checks that the data is not only loaded but also contains the expected number of rows and specific columns. EXPECTED_ROW_COUNT is a placeholder for the row count you know the source file to have. Such tests help ensure that changes to the data source do not silently break the pipeline.
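A test that reads a real file on disk depends on that file existing and never changing. One alternative is for the test to write its own small fixture file. The following is a minimal sketch using only the standard library; the `load_data` defined here is a hypothetical stand-in that returns a list of dicts, not the pandas-based loader the guide's example implies, and the column names are invented.

```python
import csv
import os
import tempfile
import unittest


def load_data(path):
    """Hypothetical stand-in for pipeline.data_ingestion.load_data."""
    with open(path, newline="") as handle:
        return list(csv.DictReader(handle))


class TestDataIngestion(unittest.TestCase):
    def setUp(self):
        # Write a three-row fixture file that the test fully controls.
        self.tmpdir = tempfile.TemporaryDirectory()
        self.addCleanup(self.tmpdir.cleanup)
        self.path = os.path.join(self.tmpdir.name, "source.csv")
        with open(self.path, "w", newline="") as handle:
            writer = csv.writer(handle)
            writer.writerow(["id", "feature"])
            writer.writerows([[1, 0.5], [2, 1.5], [3, 2.5]])

    def test_row_count_and_columns(self):
        data = load_data(self.path)
        self.assertEqual(len(data), 3)
        self.assertEqual(set(data[0]), {"id", "feature"})

    def test_missing_file_raises(self):
        missing = os.path.join(self.tmpdir.name, "nope.csv")
        with self.assertRaises(FileNotFoundError):
            load_data(missing)
```

Because the fixture is created in `setUp` and removed by `addCleanup`, the expected row count is known exactly and the test leaves nothing behind.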

Testing Data Preprocessing

Data preprocessing can involve several transformations, such as normalization, encoding categorical variables, and handling missing values. Each transformation should be unit tested to confirm that it produces the correct output.

    import unittest

    import pandas as pd

    from pipeline.preprocessing import normalize  # assumed module path


    class TestDataPreprocessing(unittest.TestCase):
        def test_normalization(self):
            raw_data = pd.DataFrame({'feature': [1, 2, 3, 4, 5]})
            processed_data = normalize(raw_data)
            # Min-max scaling should map every value into [0, 1].
            self.assertTrue((processed_data['feature'] >= 0).all())
            self.assertTrue((processed_data['feature'] <= 1).all())
            self.assertAlmostEqual(processed_data['feature'].mean(), 0.5, places=2)

This test assumes that normalize performs min-max scaling to the range [0, 1]. It checks that the output values fall within that range and that the mean of the processed data is as anticipated. Such checks help catch errors in data transformations early in the development process.
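Range and mean checks can pass even when individual values are wrong, so it can help to compare against exact expected output for a small input. The sketch below assumes min-max scaling and uses plain Python lists instead of pandas so that it runs without third-party packages; this `normalize` is a hypothetical stand-in, not the function from the guide's project.

```python
import unittest


def normalize(values):
    """Min-max scaling to [0, 1]; assumes at least two distinct values."""
    low, high = min(values), max(values)
    return [(v - low) / (high - low) for v in values]


class TestNormalization(unittest.TestCase):
    def test_known_input_gives_known_output(self):
        self.assertEqual(
            normalize([1, 2, 3, 4, 5]),
            [0.0, 0.25, 0.5, 0.75, 1.0],
        )

    def test_shifting_the_input_does_not_change_the_result(self):
        # Min-max scaling depends only on relative position in the range.
        self.assertEqual(
            normalize([11, 12, 13, 14, 15]),
            normalize([1, 2, 3, 4, 5]),
        )

    def test_input_list_is_not_mutated(self):
        values = [3, 1, 2]
        normalize(values)
        self.assertEqual(values, [3, 1, 2])
```

The inputs are chosen so that every expected value is exactly representable as a float; for less tidy numbers, `assertAlmostEqual` on each element is the safer comparison.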

Testing Model Training

Model training is a critical step that involves fitting a machine learning algorithm to the prepared dataset. Unit tests for this component should verify that the model can be trained without errors and that it produces a valid output.

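    import unittest

    # SomeModel, train_model, and training_data are placeholders in this
    # example: import your own estimator class and training function, and
    # build a small fixed dataset for training_data.


    class TestModelTraining(unittest.TestCase):
        def test_train_model(self):
            model = SomeModel()
            trained_model = train_model(model, training_data)
            self.assertIsNotNone(trained_model)
            self.assertTrue(hasattr(trained_model, 'predict'))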

In this test, the code checks that training completes and that the resulting object has the attribute needed for making predictions. This is a smoke test: it confirms that the training step runs and returns a usable object, not that the model has learned anything useful.
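Beyond checking that a `predict` attribute exists, a test can train on synthetic data whose answer is known in advance. The sketch below is one way to do that under stated assumptions: `LineModel` is a hypothetical one-feature least-squares model written for this example, and `train_model` is a stand-in with the same call shape as in the guide, taking the training data as an `(xs, ys)` pair.

```python
import unittest


class LineModel:
    """Hypothetical one-feature linear model fitted by least squares."""

    def fit(self, xs, ys):
        n = len(xs)
        mean_x = sum(xs) / n
        mean_y = sum(ys) / n
        sxx = sum((x - mean_x) ** 2 for x in xs)
        sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
        self.slope = sxy / sxx
        self.intercept = mean_y - self.slope * mean_x
        return self

    def predict(self, xs):
        return [self.slope * x + self.intercept for x in xs]


def train_model(model, training_data):
    """Stand-in for the guide's train_model; data is an (xs, ys) pair."""
    xs, ys = training_data
    return model.fit(xs, ys)


class TestModelTraining(unittest.TestCase):
    def setUp(self):
        xs = [0.0, 1.0, 2.0, 3.0, 4.0]
        ys = [2 * x + 1 for x in xs]  # noiseless line y = 2x + 1
        self.model = train_model(LineModel(), (xs, ys))

    def test_recovers_known_parameters(self):
        self.assertAlmostEqual(self.model.slope, 2.0)
        self.assertAlmostEqual(self.model.intercept, 1.0)

    def test_prediction_count_and_values(self):
        preds = self.model.predict([10.0, 20.0])
        self.assertEqual(len(preds), 2)
        self.assertAlmostEqual(preds[0], 21.0)
        self.assertAlmostEqual(preds[1], 41.0)
```

For models that cannot recover parameters exactly, the same idea applies with a looser assertion, such as requiring that error on the synthetic data stays below a chosen threshold.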

Best Practices for Unit Testing in ML Pipelines

While writing unit tests is essential, adhering to best practices can significantly enhance the effectiveness of the testing process. Here are some key recommendations:

Keep Tests Isolated

Each unit test should be independent of others. This isolation ensures that failures in one test do not affect the execution of others, making it easier to identify and fix issues. Using mock objects can help simulate dependencies without relying on actual implementations.
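As an illustration of isolating a stage from its dependencies, the sketch below uses `unittest.mock.Mock` from the standard library to replace a data source with canned rows. The `build_training_set` function and the row format are hypothetical, chosen only to show the pattern.

```python
import unittest
from unittest import mock


def build_training_set(fetch_rows):
    """Hypothetical stage: pull rows from a source and keep labelled ones."""
    rows = fetch_rows()
    return [r for r in rows if r.get("label") is not None]


class TestBuildTrainingSet(unittest.TestCase):
    def test_unlabelled_rows_are_dropped(self):
        # The mock replaces a database or API call with canned rows.
        fetch_rows = mock.Mock(return_value=[
            {"x": 1.0, "label": 0},
            {"x": 2.0, "label": None},
            {"x": 3.0, "label": 1},
        ])
        result = build_training_set(fetch_rows)
        self.assertEqual([r["x"] for r in result], [1.0, 3.0])
        fetch_rows.assert_called_once_with()

    def test_source_errors_propagate(self):
        # side_effect makes the mock raise, simulating an unavailable source.
        fetch_rows = mock.Mock(side_effect=ConnectionError("source down"))
        with self.assertRaises(ConnectionError):
            build_training_set(fetch_rows)
```

Passing the dependency in as an argument keeps the test simple; when the dependency is imported inside the module under test instead, `mock.patch` can substitute it for the duration of a test.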

Test Edge Cases

When writing tests, it is crucial to consider edge cases and potential failure scenarios. This includes testing with empty datasets, unexpected data types, and extreme values. Such tests help ensure that the pipeline can handle a variety of inputs gracefully.
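The edge cases listed above translate directly into small tests. The following sketch assumes a hypothetical min-max `normalize` over plain Python lists, with one possible policy for each edge case (empty input returns an empty list, a constant column maps to zeros, NaN is rejected); your pipeline may reasonably choose different behaviour, and the tests should then assert that behaviour instead.

```python
import math
import unittest


def normalize(values):
    """Hypothetical min-max scaler with explicit edge-case behaviour."""
    if len(values) == 0:
        return []
    if any(isinstance(v, float) and math.isnan(v) for v in values):
        raise ValueError("input contains NaN")
    low, high = min(values), max(values)
    if low == high:
        # Constant column: avoid dividing by zero; map everything to 0.0.
        return [0.0 for _ in values]
    return [(v - low) / (high - low) for v in values]


class TestNormalizeEdgeCases(unittest.TestCase):
    def test_empty_input(self):
        self.assertEqual(normalize([]), [])

    def test_constant_column(self):
        self.assertEqual(normalize([7, 7, 7]), [0.0, 0.0, 0.0])

    def test_nan_is_rejected(self):
        with self.assertRaises(ValueError):
            normalize([1.0, float("nan"), 3.0])

    def test_non_numeric_input_raises(self):
        # Comparing int and str inside min() raises TypeError in Python 3.
        with self.assertRaises(TypeError):
            normalize([1, "two", 3])

    def test_extreme_values_stay_in_range(self):
        result = normalize([-1e12, 0.0, 1e12])
        self.assertEqual(result[0], 0.0)
        self.assertEqual(result[-1], 1.0)
        self.assertTrue(all(0.0 <= v <= 1.0 for v in result))
```

Writing the edge-case tests first often forces a decision about what the function should do in each situation, which is itself a useful outcome.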

Automate Testing

Automating the testing process is vital for maintaining a robust machine learning pipeline. Continuous integration (CI) tools can be used to run tests automatically whenever changes are made to the codebase. This practice helps catch issues early and maintains the integrity of the pipeline.

Integrating Unit Tests with CI/CD Pipelines

Integrating unit tests into a continuous integration and continuous deployment (CI/CD) pipeline is a powerful way to ensure that machine learning models are always in a deployable state. By automating the testing process, teams can focus on developing and improving models without worrying about breaking changes.

Setting Up CI/CD Tools

Several CI/CD tools, such as Jenkins, Travis CI, and GitHub Actions, can be configured to run tests automatically. These tools can be set up to trigger tests on specific events, such as code commits or pull requests, ensuring that any changes are validated before merging into the main branch.

Monitoring Test Results

Monitoring the results of automated tests is crucial for maintaining the quality of the machine learning pipeline. CI/CD tools typically provide dashboards that display test results, allowing teams to quickly identify and address any failing tests. Setting up alerts for failed tests can further enhance responsiveness to issues.

Conclusion

Unit testing machine learning pipelines in Python is a vital practice that helps ensure the reliability and accuracy of models. By understanding the components of a pipeline, setting up a robust testing environment, and adhering to best practices, developers can create a testing framework that enhances the quality of their machine learning projects.

As the field of machine learning continues to grow, the importance of rigorous testing will only increase. By integrating unit tests into the development process, teams can build more robust, reliable, and maintainable machine learning systems that stand the test of time.
