OpenAI Codex promises an agentic coding workflow that sits between traditional IDE‑centric development and fully autonomous code generation. I spent several hours with the current public version, taking notes while performing a small front‑end refactor. If you are evaluating Codex today, this may be a useful overview.

TL;DR: Codex is functional, clean, and narrowly focused. It covers the basics well, but functionality is limited. The limits will feel especially acute for teams that rely on tools outside GitHub or that need greater control over configuration. A few rough edges remain.

First impressions

Auth hiccups

First contact was a little rough. The GitHub OAuth handshake failed twice before finally completing. Because Codex currently supports only GitHub, a broken auth flow blocks everything else. Once connected, the application loaded, but the initial hiccup is surprising from a business as well-resourced as OpenAI.

Minimalist to a fault

The UI is sparse. A single column holds the main interaction window; a sidebar lists repositories already linked. There are no distractions, which keeps focus on the task at hand. It looks really good, but like a very expensive kitchen that never gets used.

The trade‑off is that functionality is constrained, and some information is hidden away. This could feel limiting to would-be power-users.

Documentation

The interface feels quite intuitive, which is good, because inline help is thin. There isn’t much aside from a handful of tooltips and descriptors explaining basic functionality, which is enough to get you started. Guidance on some of the more important topics lives in separate documentation that manages to be brief and dense at the same time.

Skip the suggested tasks

Upon first run, Codex offers a few suggested tasks, which I found to be a waste of time. They were queued up when all I wanted to do was take Codex for a spin on a real task. I expect they are meant to guide your first encounter with Codex, but the basics are intuitive enough without them.

Integrations

Codex integrates only with GitHub today. GitLab, Linear, Jira, Slack, and other popular tools are notably absent. If you use GitLab, you’re out of luck, since GitHub is required to plug into any codebase. Other omissions are easier to bypass – you can easily enough paste Linear issues into the task text box.

If you use GitLab, or if workflow integrations are a must-have, try Engine, a model-agnostic alternative to Codex.

Configuration and Flexibility

Codex exposes only two meaningful workflow settings: default branch naming and a free‑form custom instruction field that behaves like a system prompt for the model. Everything else is fixed. This is beautifully simple but limits where Codex can be useful.

Environment Setup

Environments are configured on a per-repo basis, which makes sense. Here things are similarly straightforward, though a couple of details feel under-considered.

Environment variables must be added individually with separate fields for key and value. On a repo with many secrets this is tedious.
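
One way to take some of the tedium out of this, sketched under assumptions: a small TypeScript helper that reads the text of an existing .env file and splits it into key/value pairs, ready to copy into the two fields. The `parseDotEnv` function and the `EnvEntry` type are my own names, not part of Codex, and the parser only handles simple `KEY=value` lines.

```typescript
// Hypothetical helper, not part of Codex: turns the text of a .env file
// into key/value pairs that can be copied into the separate fields.
interface EnvEntry {
  key: string;
  value: string;
}

function parseDotEnv(text: string): EnvEntry[] {
  const entries: EnvEntry[] = [];
  for (const rawLine of text.split(/\r?\n/)) {
    const line = rawLine.trim();
    // Skip blank lines and comments.
    if (line === "" || line.startsWith("#")) continue;
    const eq = line.indexOf("=");
    // Ignore lines with no "=" separator or with an empty key.
    if (eq <= 0) continue;
    const key = line.slice(0, eq).trim();
    let value = line.slice(eq + 1).trim();
    // Strip one pair of matching surrounding quotes, if present.
    const quoted =
      value.length >= 2 &&
      ((value.startsWith('"') && value.endsWith('"')) ||
        (value.startsWith("'") && value.endsWith("'")));
    if (quoted) value = value.slice(1, -1);
    entries.push({ key, value });
  }
  return entries;
}
```

Only the first `=` on a line is treated as the separator, so values that themselves contain `=` (connection strings, base64 tokens) survive intact.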

The manual container setup page lets you choose preinstalled packages. That part is welcome: selecting PostgreSQL or Redis images is a click. After the container boots, it is sealed off from the public internet, which limits some use cases.

A bigger concern is feedback. The UI never states “Your container is configured correctly”. You infer success only when the agent later runs lint and test steps without failing.

If greater workflow flexibility or more powerful environment configuration is important to you, Engine could be a better fit. Engine’s setup agent can take care of complex codebase setup automatically.

Agents.md Convention

Codex recommends storing common context in an agents.md markdown file that tells agents how to understand and work with your repository. This is a neat convention to encourage and works similarly to a .cursorrules or claude.md file. Here is where you describe how Codex should check its work – what tests, formatting or linting to run, for example. However, it does not let you dictate explicit lint or test commands. The agent decides which scripts to execute. Teams with bespoke CI workflows may find that too implicit.

For clearer CI workflow definition, Engine allows you to import GitHub and GitLab workflows as well as run any explicit command before making a PR.

Main interaction window

The heart of the product is a text area that toggles between Ask and Code modes. Ask sends a conversational query; Code starts a task that should result in a pull request. This split keeps intent clear and is neat to interact with. I appreciated being able to ask clarifying questions without creating noise in the repo.

You can also add comments or request changes directly from inside the Codex app once a PR appears. That feels natural for people who live in browser tools all day. Engineers anchored in local editors may ignore it.

Trial task: small frontend refactor

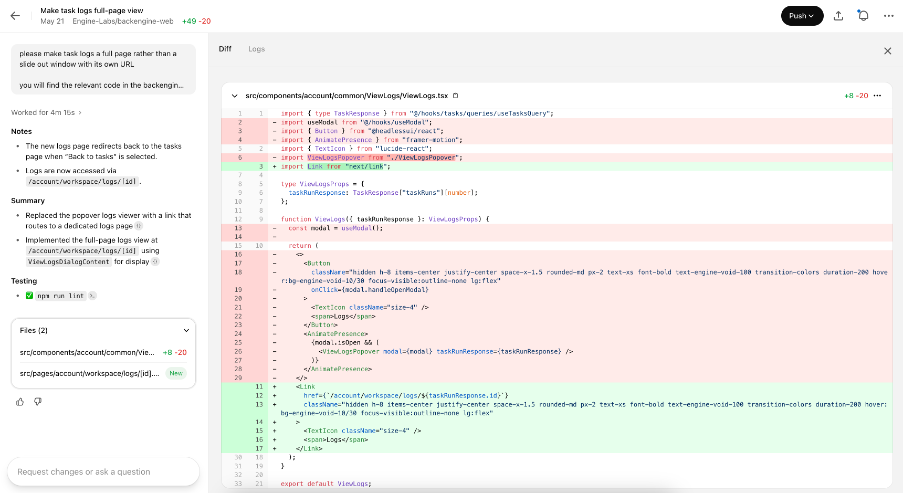

I asked Codex to complete a simple task: change a pop-out modal in Engine into a separate page with a direct URL. The repository was a fairly complex TypeScript Next.js application.

Execution time – Codex produced a PR in a few minutes, with its work streaming past in a terminal window and a conversational logging interface. Since the task runs asynchronously, a longer execution time isn’t a big deal.

Code quality – The Codex app clearly displays the diff, but without a running dev server the diff alone gave limited confidence. In any case, the edits looked coherent and touched two relevant files.

Once I opened the PR, the change was deployed to a preview environment and I was able to click around. Impressively, the change functioned basically as expected, but with a slightly different design to what I imagined. That’s fair enough given the extremely brief task description, but it would have been nice to be able to provide an image to communicate my intent more easily.

Pull request metadata

The PR title and description left a little to be desired and were overly brief. However, they clearly defined which tests had been run and their outcome.

Codex committed the branch under my GitHub account. There is no setting to use a different identity. Some teams will consider that a compliance issue.

Agent reactivity

I left a code review on the PR requesting one small tweak to see if Codex would pick it back up and make the change. Codex did not react. It appears unaware of GitHub review events, so changes have to be requested in Codex’s proprietary interface.
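
For a sense of what reacting to review events would involve, here is a minimal sketch of the filtering step. It assumes a webhook receiver that already has the event name from GitHub's `X-GitHub-Event` header and the parsed JSON payload. The `ReviewEvent` shape is a trimmed-down assumption covering only the fields used here, and `followUpFromReview` and `FollowUpTask` are hypothetical names. It is an illustration only, not a description of how Codex or Engine works internally.

```typescript
// Assumed, trimmed-down shape of a GitHub "pull_request_review" webhook
// payload; only the fields this sketch reads are declared.
interface ReviewEvent {
  action: string;
  review: { state: string; body: string | null };
  pull_request: { number: number };
}

// Hypothetical description of the work an agent would pick back up.
interface FollowUpTask {
  prNumber: number;
  instructions: string;
}

function followUpFromReview(
  eventName: string,
  payload: ReviewEvent
): FollowUpTask | null {
  // Only review events are relevant; pushes, comments, etc. are ignored.
  if (eventName !== "pull_request_review") return null;
  // Act when a review is submitted, not when it is edited or dismissed.
  if (payload.action !== "submitted") return null;
  // Only a "changes requested" review should trigger more work.
  // The state is lowercased defensively before comparing.
  if (payload.review.state.toLowerCase() !== "changes_requested") return null;
  const body = (payload.review.body ?? "").trim();
  // Without any review text there is nothing to hand to the agent.
  if (body === "") return null;
  return { prNumber: payload.pull_request.number, instructions: body };
}
```

A real integration would also need to verify the webhook signature and collect inline review comments, both of which are left out of this sketch.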

Developer ergonomics

Codex sits outside the local editor and can’t be interacted with from workflow tools or GitHub. Every task lives in a web interface. This is characteristic of the overall direction of travel for software engineering, but it shows little sympathy for established workflows. The diff viewer is passable, but without an inline running app or terminal it’s not particularly functional.

Strengths

- Clean UI – minimal friction and looks great.

- Successful first build – my sample task deployed without any manual fixes or additional input.

- Agents.md – agent config file convention is helpful and would benefit the ecosystem if it catches on.

- Preinstalled package selector – takes much of the complexity out of container configuration in the proprietary setup interface.

Weaknesses

- GitHub‑only – no GitLab, Linear, Jira, Slack, etc.

- Thin docs – unclear troubleshooting paths.

- Janky in places – individual environment variable entry, for example.

- No internet inside container – less flexible once the task has started.

- Agent reactivity gaps – ignores PR feedback.

- Limited config – cannot define lint, test, or build commands explicitly.

- Auth instability – initial OAuth failures block onboarding.

Comparison with Engine

Out of interest, I ran the same task through Engine. Engine has a few key differences to Codex. It runs in a VM rather than a container, has internet access, offers workflow integrations and more configuration options, and runs on top of any frontier LLM for code, among other things.

Model choice – I ran the task on Gemini 2.5 Pro first, then on Claude 3.7. Both models completed the task in a similar time to Codex.

Result – Both PRs had better, more detailed descriptions that helped with code review. Gemini produced a similar result to Codex in one shot, with some rougher design details. Claude required a PR review, which I was able to do directly in GitHub. Its result was far closer to the design I imagined than either Gemini’s or Codex’s, which points to Claude’s aptitude for frontend repos, despite the need to nudge it in the right direction.

These points highlight that Codex’s single‑model focus provides consistency but limits flexibility. If open model selection matters to your workflow, Codex may feel constraining.

Final verdict

OpenAI Codex covers the essentials with a focused, uncluttered interface. It authenticated to GitHub (eventually), spun up an isolated container without much config, and produced a mergeable pull request in minutes. For straightforward repositories that live entirely in GitHub and that do not need external workflow links, Codex is already serviceable. Teams that demand richer integration, customizable pipelines, or multi‑model experimentation will quickly bump into walls.

Codex feels like a solid MVP around an excellent model rather than a finished platform. The foundation is promising, but gaps around documentation, configuration depth, and reactive intelligence must close before wider adoption. Until then, keep it on the radar, run a proof of concept against a low‑stakes project, and weigh it against IDE-based tools or other remote agents.