Google’s Jules AI coding agent is an ambitious step beyond traditional code assistants like autocomplete and copilots. Announced in late 2024 and first offered as a limited private beta, Jules is now publicly available and promises to automatically handle coding chores – from fixing bugs to writing tests – while developers focus on more complex tasks. Unlike inline code suggestion tools, Jules operates asynchronously as an autonomous coding agent that works in the background. This means you can assign Jules a development task, continue with other work, and return later to review a completed solution. It’s a marked shift in paradigm: not just an AI pair-programmer, but potentially an AI that can take on entire coding tasks semi-independently.

As the race in AI-assisted development intensifies, Google’s launch of Jules comes amid stiff competition. Microsoft’s GitHub Copilot and OpenAI’s Codex have set high standards for AI coding assistance, and both companies have recently released more autonomous, agent-style versions of those products to the public. Google’s Jules enters this arena aiming to leapfrog these tools with deeper integration and planning abilities, backed by the powerful Gemini 2.5 Pro model. In this review, we’ll dive into Jules’s core capabilities, technical underpinnings, real-world use cases, and how it stacks up against the competition. We’ll also critically examine its limitations and consider just how useful this “AI coder” really is for professional engineers in day-to-day development.

Core Capabilities of Jules AI

At its core, Jules is designed to offload the tedious and repetitive tasks that often bog down developers. It is capable of handling a variety of coding tasks autonomously when pointed at a codebase and given a goal. Key capabilities include:

- Bug Fixes: Jules can automatically apply fixes for known issues. Developers can assign Jules an issue (e.g. from a bug tracker or a failing test), and Jules will formulate code changes to resolve it. It uses the context of your repository to locate the problem and patch it, potentially touching multiple files if necessary.

- Writing Tests: The agent can generate unit tests or other tests to increase coverage and ensure code correctness. For instance, a developer could prompt Jules with “Add a test suite for the parseQueryString function” and Jules will write relevant test cases. Early users have reported that Jules can effectively analyze a project and create comprehensive tests – even aiming for 100% coverage – something that impressed many who compared it with OpenAI’s Codex.

- Implementing Features: Jules isn’t limited to bug fixes. It can also build new features by writing fresh code. Given a high-level description of a feature or enhancement, it will generate the necessary code changes to implement it. This might involve creating new modules, modifying configs, and more, all coordinated as part of a plan.

- Code Refactoring and Updates: Another strength is handling maintenance work like refactoring code or updating dependencies. Jules can bump library versions and update configurations (for example, upgrading your project to use a new version of Node.js or a new framework release). It will adjust relevant files (like package.json, build scripts, etc.), attempt to run the build or tests to verify nothing breaks, and summarize the changes.

- Documentation and Changelogs: Jules even helps with documentation. It can add or update code comments and docs for clarity where needed. Uniquely, it offers audio changelogs – essentially spoken summaries of recent changes. In practice, this means Jules can generate a contextual summary of what changed in your project, which you could listen to for a quick update. This novel feature caters to developers who might appreciate an auditory recap of code updates.
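
To make the “Writing Tests” example concrete, here is a minimal sketch of the kind of suite such a prompt might produce. It assumes a hypothetical Python counterpart of parseQueryString, called parse_query_string here, built on the standard library’s urllib.parse.parse_qs. It illustrates scope and style only and is not actual Jules output.

```python
import unittest
from urllib.parse import parse_qs


def parse_query_string(query: str) -> dict[str, list[str]]:
    """Hypothetical function under test: turn 'a=1&b=2' into {'a': ['1'], 'b': ['2']}.

    Leading '?' characters are stripped, and keys with blank values are kept.
    """
    return parse_qs(query.lstrip("?"), keep_blank_values=True)


class ParseQueryStringTests(unittest.TestCase):
    def test_simple_pairs(self):
        self.assertEqual(parse_query_string("a=1&b=2"), {"a": ["1"], "b": ["2"]})

    def test_leading_question_mark_is_ignored(self):
        self.assertEqual(parse_query_string("?q=jules"), {"q": ["jules"]})

    def test_repeated_keys_collect_all_values(self):
        self.assertEqual(parse_query_string("tag=x&tag=y"), {"tag": ["x", "y"]})

    def test_percent_encoding_is_decoded(self):
        self.assertEqual(parse_query_string("name=John%20Doe"), {"name": ["John Doe"]})

    def test_blank_value_is_kept(self):
        self.assertEqual(parse_query_string("empty="), {"empty": [""]})

    def test_empty_input_gives_empty_dict(self):
        self.assertEqual(parse_query_string(""), {})
```

If you save this as a test module, you can run it with python -m unittest. A real suite would target your project’s own implementation rather than a thin wrapper over the standard library.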

These capabilities are enabled by Jules’s deep code understanding. It doesn’t operate on single-file prompts alone; it works against a full copy of your repository so it can reason about the codebase holistically. This allows it to make multi-file changes intelligently, for example updating function calls across a project or ensuring a new feature is initialized in all the right places. Google describes Jules as an agent that “creates comprehensive, multi-step plans to address issues, efficiently modifies multiple files, and even prepares pull requests” to merge the fixes back into the repo. In essence, Jules acts almost like a junior developer who can understand your codebase and perform scoped assignments, except that it works much faster and at greater scale.

Under the Hood: Technical Foundations

The impressive capabilities of Google Jules AI stem from its technical underpinnings, which combine a powerful large language model with an execution environment and tight VCS integration. Jules is built on Google’s Gemini AI model, leveraging the latest version known as Gemini 2.5 Pro. Gemini 2.5 Pro is Google’s state-of-the-art multimodal language model, succeeding earlier versions like Gemini 2.0. It brings advanced code understanding and reasoning abilities that surpass many predecessors. In fact, Google’s research indicated that an agent using Gemini 2.0, combined with an execution feedback loop, achieved 51.8% success on SWE-bench (Verified) – a benchmark of real-world software engineering tasks. This was accomplished by the model generating hundreds of candidate solutions and verifying them against unit tests, a strategy that Gemini’s speed made feasible. The use of Gemini 2.5 Pro in Jules means developers are tapping into one of the most powerful coding AIs available.
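
The generate-and-verify strategy behind that SWE-bench result can be illustrated in miniature. You produce several candidate solutions, run each against the unit tests, and keep one that passes. The sketch below is a toy. Hard-coded candidates stand in for model samples, and it simply takes the first passing candidate. A real system would also need a way to rank several passing candidates.

```python
from typing import Callable, Optional, Sequence

# One unit test: (arguments, expected result).
Example = tuple[tuple, object]


def passes_all(candidate: Callable, tests: Sequence[Example]) -> bool:
    """Run every test against one candidate; an exception counts as a failure."""
    for args, expected in tests:
        try:
            if candidate(*args) != expected:
                return False
        except Exception:
            return False
    return True


def select_verified(candidates: Sequence[Callable], tests: Sequence[Example]) -> Optional[Callable]:
    """Return the first candidate that passes every test, or None if none do."""
    for candidate in candidates:
        if passes_all(candidate, tests):
            return candidate
    return None


# Three hypothetical "generated" attempts at an absolute-value function.
candidates = [
    lambda x: x,                    # wrong for negative inputs
    lambda x: -x,                   # wrong for positive inputs
    lambda x: x if x >= 0 else -x,  # correct
]
tests = [((3,), 3), ((-4,), 4), ((0,), 0)]

best = select_verified(candidates, tests)
```

Generating hundreds of samples is only useful if checking each one is cheap, which is why fast inference and a reliable test suite matter so much for this approach.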

Want to use models other than Gemini 2.5 Pro, such as Claude 4? Try Engine and hot-swap models from OpenAI, Google, and Anthropic with a state-of-the-art agent integrated into your workflow.

However, a great model alone isn’t enough. Jules couples the AI with an agentic planning system and a secure execution environment. Here’s how it works under the hood:

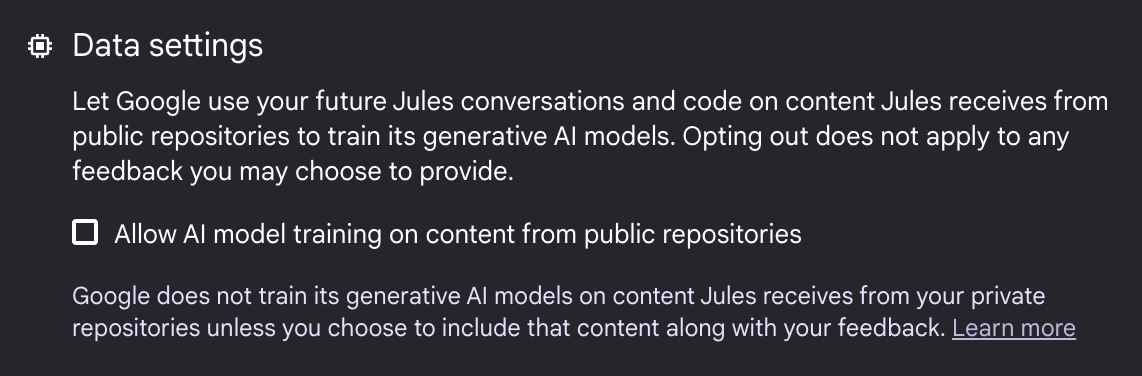

- Asynchronous Cloud VM: When you assign a task, Jules spins up a dedicated virtual machine in Google Cloud and clones your repository into it. This VM is a sandbox where the agent can safely make changes, run build or test commands, and even access the internet for dependencies or documentation as needed. Because it’s a full Linux environment with your code, Jules isn’t limited to a snippet of context – it can truly “read” and modify your entire project. The VM isolation also addresses security: your code and data remain contained, and Jules does not leak or use your private code to train models. Public repositories are a different matter, so if you don’t want yours used for training, be sure to change the default settings.

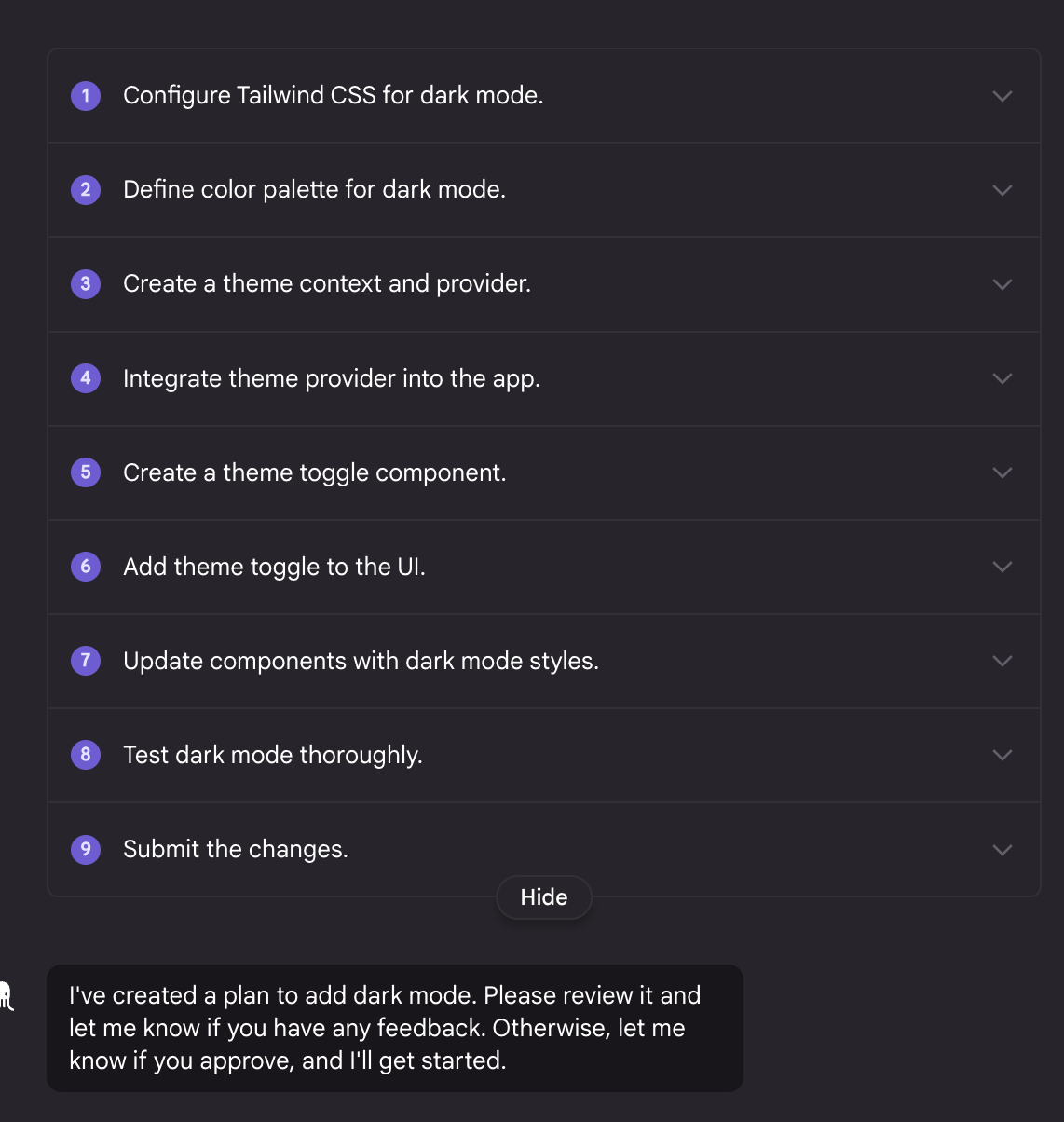

- Multi-step Planning: Jules doesn’t jump straight into writing code. Instead, it generates a plan – a structured sequence of steps it intends to take. For example, if tasked with upgrading the Node.js version in a project, a plan might include steps like “Update version in package.json, run tests to verify, fix any compatibility issues, then commit changes.” This planning stage is crucial for transparency and correctness. Each step is described in natural language, sometimes with sub-tasks or assumptions (e.g. “will use nvm to install Node 18 and update engines field”) so the developer can see Jules’s approach before execution.

- Developer in the Loop: Even though Jules automates much of the grunt work, it keeps the developer firmly in control. When the plan is presented, the developer can review it and must explicitly approve it before code execution begins. At this point, you can also intervene – modify or refine the plan via an interactive chat if something looks off. This “user steerability” is a design priority: “Modify the presented plan before, during, and after execution to maintain control over your code,” as Google emphasizes. In practice, Jules allows back-and-forth in a chat interface at any time. You might correct Jules’s misunderstanding, answer a clarification question it asks, or request an additional change (e.g. “Also update the README for this new feature”).

- Parallel Execution and Speed: Once approved, Jules executes the plan. Thanks to Google’s cloud infrastructure and Gemini’s efficiency, Jules can work through tasks much faster than a human, and it may run build scripts, tests, and edit operations in parallel where the plan allows. Google notes that Jules’s cloud infrastructure enables concurrent task handling, meaning you can run two tasks at once (e.g., fix two different bugs simultaneously) within the limits of the beta. Currently, beta users can run up to 2 tasks in parallel and up to 5 tasks per day by default. This hints at Jules’s capacity, but also at the need to moderate usage in this early phase.

- Integration with Git: Jules’s final output is designed to seamlessly integrate into your version control workflow. It not only shows you a diff of all changes it made, but also commits the changes on a new branch when you’re ready. With one click, you can push that branch to GitHub via the Jules interface, and then open a pull request for review and merging. Notably, Jules appears as the commit author (annotating that an AI agent made the commit), but if you open a PR, you (the human) are listed as the PR author. This encourages human oversight and preserves accountability – the developer remains responsible for approving the merge after reviewing Jules’s work. The tight GitHub integration means there’s no context switching; Jules works “directly inside your GitHub workflow” as Google puts it. Developers access it via the web (or potentially an IDE plugin in the future), and once GitHub permissions are granted, Jules can create branches, commit, and propose PRs just like a team member.
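
The plan-then-approve flow described above can be modeled with a small data structure: an ordered list of steps plus an approval flag that execution checks first. This is a hypothetical sketch, not Jules’s internal representation. The Plan and Step classes, and the rule that any edit resets approval, are illustrative assumptions.

```python
from dataclasses import dataclass, field


@dataclass
class Step:
    description: str
    done: bool = False


@dataclass
class Plan:
    """Hypothetical agent plan: ordered steps plus an explicit human-approval flag."""
    task: str
    steps: list[Step] = field(default_factory=list)
    approved: bool = False

    def revise(self, index: int, new_description: str) -> None:
        """Developer edits a step; in this sketch any edit requires re-approval."""
        self.steps[index].description = new_description
        self.approved = False

    def approve(self) -> None:
        self.approved = True

    def execute(self) -> list[str]:
        """Refuse to run until approved; return an activity log of executed steps."""
        if not self.approved:
            raise PermissionError("plan must be approved before execution")
        log = []
        for number, step in enumerate(self.steps, start=1):
            step.done = True  # a real agent would edit files or run commands here
            log.append(f"Step {number}/{len(self.steps)}: {step.description}")
        return log


# Example: review the plan, tweak one step, approve, then run it.
plan = Plan("Upgrade Node.js", [
    Step("Update the engines field in package.json"),
    Step("Run tests"),
    Step("Fix any compatibility issues"),
    Step("Commit changes on a new branch"),
])
plan.revise(1, "Run unit and integration tests")
plan.approve()
log = plan.execute()
```

Resetting approval on every edit is one conservative design choice. A tool could instead let users amend steps mid-run, as Jules’s chat interface allows.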

Behind all these steps, the heavy lifter is the AI model. Gemini 2.5 Pro not only generates the code but also the reasoning for each step (which you can see in the activity feed as it runs). The activity log shows Jules’s “thoughts” and rationale in real-time – for instance, it might log “Step 3/5: Running unit tests to ensure no regressions” or “Error encountered: missing module, attempting npm install to resolve”. This gives developers insight into what the AI is doing, almost as if a colleague were narrating their work. If something goes wrong, Jules may adjust on its own or ask for guidance. It will automatically retry on certain failures (within limits) – for example, if tests fail, it might attempt a fix and run again, or if a setup script crashes, it flags it. If a task ultimately cannot be completed, Jules marks it as failed and notifies the user, who can then tweak the prompt or fix environment issues and rerun it.
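
The bounded retry behavior described above follows a common pattern. The agent runs a step; on failure, it applies a fix and tries again until a retry budget runs out, then reports failure. In this sketch, run_step and attempt_fix are hypothetical hooks standing in for “run the tests” and “edit the code”. The limit of 3 attempts is an assumption, since Google has not said how many retries Jules makes.

```python
from typing import Callable


def run_with_retries(
    run_step: Callable[[], bool],
    attempt_fix: Callable[[int], None],
    max_attempts: int = 3,
) -> tuple[str, list[str]]:
    """Run a step; on failure apply a fix and re-run, up to max_attempts runs in total.

    Returns ("completed" or "failed", activity_log).
    """
    log: list[str] = []
    for attempt in range(1, max_attempts + 1):
        if run_step():
            log.append(f"Attempt {attempt}: step succeeded")
            return "completed", log
        log.append(f"Attempt {attempt}: step failed")
        if attempt < max_attempts:
            attempt_fix(attempt)
            log.append(f"Attempt {attempt}: applied a fix, retrying")
    log.append("Giving up: marking task as failed and notifying the user")
    return "failed", log


# Simulated environment: tests keep failing until two fixes have been applied.
state = {"fixes": 0}
status, log = run_with_retries(
    run_step=lambda: state["fixes"] >= 2,
    attempt_fix=lambda attempt: state.update(fixes=state["fixes"] + 1),
)
```

Capping attempts keeps a stuck task from burning resources indefinitely. It also hands the problem back to the developer, who can adjust the prompt or environment and rerun.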

In summary, Jules’s architecture marries a cutting-edge LLM with pragmatic software engineering tooling. By planning first, executing in a sandbox, and involving the developer at key points, it aims to be both powerful and safe. It is notable that Jules operates with respect for privacy: Google has stated clearly that Jules does not train on your private repository data. All code stays isolated and is not fed back into Google’s models, addressing a major concern companies have with AI dev tools.

Asynchronous Workflow and Use Cases

One of the most touted features of Jules is its asynchronous nature. Unlike a live pair-programmer that requires your constant input or an IDE assistant that completes your line of code as you type, Jules enables a “fire-and-forget” style of interaction for certain tasks. This mode opens up new workflows for developers and teams:

Working in the Background: Because Jules handles tasks independently once you kick them off, you can assign a non-urgent coding task to it and literally walk away. For example, suppose you have a list of trivial bugs from a bug bash or a bunch of minor code refactors – you can create tasks for each, hit run, and then move on to designing a new feature or attending a meeting. Jules will work through the task list, and you’ll get a notification when each task is done or if it needs your attention. This is a productivity boon: you effectively have a junior developer working in parallel with you. Google reports that internal teams using Jules enjoyed “more productivity” by offloading busywork and context-switching less.
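
The fire-and-forget style with a concurrency cap maps naturally onto Python’s asyncio: start every task at once and let a semaphore keep only a fixed number running. This is a local simulation in which asyncio.sleep stands in for real agent work. The cap of 2 mirrors the beta limit mentioned earlier, and nothing here calls a Jules API.

```python
import asyncio

MAX_CONCURRENT = 2  # mirrors the beta's default limit of 2 parallel tasks


async def run_task(name: str, seconds: float, gate: asyncio.Semaphore, stats: dict) -> str:
    """Stand-in for one background agent task; the sleep simulates the work."""
    async with gate:
        stats["running"] += 1
        stats["peak"] = max(stats["peak"], stats["running"])
        await asyncio.sleep(seconds)
        stats["running"] -= 1
    return f"{name}: done, ready for review"


async def run_backlog(tasks: dict[str, float]) -> tuple[list[str], int]:
    """Start every task at once; the semaphore keeps at most MAX_CONCURRENT running."""
    gate = asyncio.Semaphore(MAX_CONCURRENT)
    stats = {"running": 0, "peak": 0}
    results = await asyncio.gather(
        *(run_task(name, secs, gate, stats) for name, secs in tasks.items())
    )
    return list(results), stats["peak"]


if __name__ == "__main__":
    backlog = {"fix-bug-101": 0.02, "fix-bug-102": 0.01, "add-tests": 0.03}
    notifications, peak = asyncio.run(run_backlog(backlog))
    for note in notifications:
        print(note)
    print("peak concurrency:", peak)
```

asyncio.gather returns results in submission order once everything finishes. To surface a notification as each task completes, asyncio.as_completed would be the closer fit.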

Use Cases in Real Projects: The real-world uses of Jules tend to cluster around tasks that are well-defined and somewhat self-contained. Some practical examples include:

- Clearing Out a Bug Queue: If you’ve got a backlog of known bugs (with associated issue descriptions or failing tests), Jules can be directed one by one to fix them. You provide the issue description as the prompt, and it will attempt a fix, run tests, and prepare the code changes for review. This could be game-changing right before a release crunch, handling a flurry of small issues overnight.

- Writing Missing Tests or Docs: Maybe your project has areas with insufficient tests or documentation. Jules can be asked to “add documentation comments to all public functions in Module X” or “create tests for module Y to reach 90% coverage.” Because it reads the codebase, it can generate reasonable docs and tests that integrate with your existing ones. It’s like having a dedicated QA/dev writing those much-needed tests while you focus on core logic.

- Upgrading Dependencies: Software maintenance often requires updating library versions or frameworks (for security or performance). Jules shines at this use case. A task might be “Upgrade this app to Angular 16” or “Bump all dependencies to latest minor version and ensure it still builds.” Jules will modify config files, maybe update API usage if needed, and attempt to get the project running on new versions. It can even compile the project to verify things, since it’s operating in a live environment.

- Implementing Template Features: If there’s a standard feature (like adding a new REST API endpoint, or implementing a forgot-password flow) that involves boilerplate across multiple files, Jules can handle the boring parts. You describe the feature and it writes the code, often borrowing patterns from your existing codebase to stay consistent. For instance, “Add a new API route for exporting user data, similar to the import route we have” – Jules can find the import route code and mirror its style to build the export functionality.

- Consulting Documentation / Codebase Q&A: While not a traditional “task,” Jules can answer questions about your codebase (“What does this function do?”, “Where is the user authentication implemented?”) thanks to the LLM’s knowledge and full project context. Google’s Josh Woodward noted Jules can “consult documentation” in the background when working on tasks. This suggests that if Jules needs clarity, it might look at your README, code comments, or even external docs (perhaps via internet access) to ensure it’s doing the right thing.
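
For the “bump all dependencies to the latest minor version” request, the core decision per package is simple: among the published versions, take the newest one that keeps the current major version. This is a minimal sketch under stated assumptions. It handles plain MAJOR.MINOR.PATCH strings only, with no pre-release tags and none of the special 0.x rules that npm’s caret ranges apply. The registry is made up, and the package names are hypothetical.

```python
def parse_version(version: str) -> tuple[int, int, int]:
    """Parse a plain 'MAJOR.MINOR.PATCH' string (pre-release tags are not handled)."""
    major, minor, patch = (int(part) for part in version.split("."))
    return major, minor, patch


def latest_same_major(current: str, available: list[str]) -> str:
    """Return the newest available version with the same major number, never older than current."""
    cur = parse_version(current)
    compatible = [
        v for v in available
        if parse_version(v)[0] == cur[0] and parse_version(v) >= cur
    ]
    return max(compatible, key=parse_version, default=current)


def plan_bumps(deps: dict[str, str], registry: dict[str, list[str]]) -> dict[str, str]:
    """Map each dependency that has a newer same-major release to its target version."""
    bumps = {}
    for name, current in deps.items():
        target = latest_same_major(current, registry.get(name, []))
        if target != current:
            bumps[name] = target
    return bumps


# Hypothetical packages and version lists, for illustration only.
deps = {"pkg-a": "1.2.0", "pkg-b": "3.1.4"}
registry = {
    "pkg-a": ["1.2.0", "1.3.0", "1.4.2", "2.0.0"],
    "pkg-b": ["3.1.4", "4.0.0"],
}
bumps = plan_bumps(deps, registry)  # {"pkg-a": "1.4.2"}; pkg-b's only newer release is a major bump
```

This covers only choosing target versions. Editing package.json and running the build to confirm nothing broke would remain the agent’s job.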

Progress Updates and Notifications: Because tasks may take some time (anywhere from seconds to many minutes for large tasks), Jules provides real-time progress and notifications. In the web UI, you can watch the live feed as it steps through the plan. However, you’re not required to babysit it; you can close the window and do other work. Enabling notifications will alert you when Jules finishes or if it pauses for feedback. For example, you might get a browser notification: “Jules has completed Task: Update Node.js version – 5 files changed, 2 tests added. Review required.” This asynchronous workflow fits naturally into the way developers work – similar to running long CI jobs or automated refactoring tools and checking results later.

Team Collaboration: Jules could also be used by teams as a sort of automated “developer bot.” For instance, a team lead might queue up a set of Jules tasks at the end of the day (one for each open bug or each new feature stub) and let Jules churn through them overnight. The next morning, developers come in to a set of fresh branches/PRs to review, rather than starting from scratch. It turns development into a higher-level supervisory role for those tasks – verifying and polishing the AI’s contributions, rather than writing everything manually from zero. This can accelerate development cycles if used judiciously.

Wish you could add your team to a Jules workspace? Try Engine, which lets you add all of your team members to work on all of your configured repos.

It’s worth noting that Jules is language-agnostic in principle, meaning it can work with many programming languages. In practice, though, it “works best” with certain ecosystems, specifically JavaScript/TypeScript, Python, Go, Java, and Rust at this stage. This aligns with common project types and what environments Google has likely optimized Jules for (these languages are also likely supported in the default VM with necessary runtimes). If you use a language outside this list, Jules might still function – but you may need to ensure the VM has the right compilers or interpreters and provide a clear setup script. The effectiveness of Jules will also depend on the clarity of your environment setup and tests. Projects with good automated tests and straightforward build steps let Jules verify its work, increasing trust that the changes are solid.

Innovative Features That Set Jules Apart

Beyond its basic capabilities, Jules introduces or popularizes several innovative features that distinguish it from earlier coding assistants:

- Autonomous Multi-Step Planning: As discussed, Jules’s ability to generate a multi-step plan before writing code is a key differentiator. Competing tools historically operated step by step or interactively, whereas Jules can outline an entire solution approach in one go. This planning not only provides transparency but often results in more coherent changes, since the steps are considered together. It’s like having an AI that not only writes code but also project-manages the change. Early testers noted this as a major advantage: “Jules plans first and creates its own tasks. Codex does not. That's major.” By seeing the plan, developers can course-correct early or gain confidence that Jules “gets it” before time is spent coding.

- Rich GitHub Integration: Jules is not a standalone sandbox or a separate IDE plugin – it lives where your code lives. The direct integration with GitHub means Jules can be truly woven into your development workflow. For example, imagine opening a pull request and having Jules automatically offer to generate additional tests for it, or an issue in GitHub that has a “Fix with Jules” button. While those specific features might be on the roadmap, the groundwork is laid. No context switching to copy code from your IDE to an AI chat window – Jules already has the repo and can directly commit changes. This deep integration is a step beyond tools that, say, only provide a diff that you must manually apply. Jules essentially acts as an automated Git collaborator.

Looking for a GitLab integration? Engine runs on both GitHub and GitLab out of the box.

- User Steerability & Interactive Feedback: Many AI code tools are one-shot or limited in interaction (you prompt, they give code, end of story). Jules, by contrast, supports an interactive dialog throughout the task lifecycle. You can chat with Jules to refine its plan or code, which is more natural and flexible. For example, during execution you might see it naming a function poorly; you can type “Use a more descriptive name for that function” and Jules will adjust on the fly. This level of real-time feedback and correction is akin to how you’d guide a human junior developer – a unique selling point for Jules. It means the output can incrementally be steered towards exactly what you want, reducing the time spent cleaning up after the fact.

- Concurrent Task Handling: Jules can juggle multiple tasks at once in separate sandboxes (within beta limits). This parallelism is innovative among AI dev tools. It hints at future possibilities where an AI agent could coordinate across tasks – for instance, update two services in parallel, or fix bugs across different repositories concurrently. While current usage is capped (to ensure system stability), it showcases the potential for scale: one developer supervising multiple AI threads of work simultaneously.

- Audio Summaries (Changelogs): The inclusion of audio changelogs is rather novel. After Jules completes tasks, it can produce an audio summary narrating what changed. While this might seem like a minor convenience, it’s an interesting productivity idea – developers can listen to summaries of code changes during a commute or stand-up meeting. It takes the concept of commit messages or diff review to a different medium. Under the hood, this likely uses Gemini’s multilingual text-to-speech capability (since Gemini 2.x supports generating audio). The audio changelog feature underscores Google’s push for multimodal AI usefulness, and while not critical to coding itself, it’s an innovative way to keep developers in the loop.

- Real-Time Tool Usage: Jules has the ability to invoke tools during its run, such as running shell commands, tests, or even web searches if needed. In fact, Gemini 2.0 introduced native tool use (like calling an API or execution environment), and Jules leverages this by executing code and reading outputs. This “agent loop” of generate code -> run code -> read results -> adjust is cutting-edge and something that sets autonomous coding agents apart from static code generation. Jules can compile and run your program as part of its process, which means it doesn’t just hallucinate solutions – it often verifies them. For example, if tasked to optimize a function, Jules might run a quick benchmark in the VM to confirm the new version is faster. This blurs the line between coding and DevOps automation, potentially letting the AI ensure its work meets acceptance criteria before handing it back to you.
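
The benchmark example above amounts to a verify-then-measure check. First confirm that the rewritten function returns the same results as the original on a set of inputs, and only then compare timings. The sketch uses Python’s timeit module. The duplicate-detection functions and test inputs are hypothetical stand-ins, not something Jules is documented to run.

```python
import timeit


def has_duplicates_original(items: list[int]) -> bool:
    """Original O(n^2) version: compare every pair."""
    for i in range(len(items)):
        for j in range(i + 1, len(items)):
            if items[i] == items[j]:
                return True
    return False


def has_duplicates_optimized(items: list[int]) -> bool:
    """Candidate O(n) rewrite: a set drops repeats, so a size difference means duplicates."""
    return len(set(items)) != len(items)


def verify_optimization(original, candidate, inputs, number: int = 5) -> dict:
    """Check outputs match on every input first; only then time both on the largest input."""
    for data in inputs:
        if original(data) != candidate(data):
            return {"ok": False, "reason": f"output mismatch on input of length {len(data)}"}
    largest = max(inputs, key=len)
    t_original = timeit.timeit(lambda: original(largest), number=number)
    t_candidate = timeit.timeit(lambda: candidate(largest), number=number)
    return {
        "ok": t_candidate < t_original,
        "speedup": t_original / max(t_candidate, 1e-9),  # guard against a zero timing
    }


inputs = [[], [1, 2, 3], [1, 2, 1], list(range(500))]
report = verify_optimization(has_duplicates_original, has_duplicates_optimized, inputs)
```

Checking correctness before speed matters: a “faster” version that returns wrong answers should be rejected outright, not reported as an improvement.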

Collectively, these features illustrate why many in the developer community see Jules as more than just another Copilot. Some have even hailed it as a potential “Codex killer”, referring to OpenAI’s competing coding agent. While it’s too early to declare winners, it’s clear Jules is pushing the envelope on autonomy and integration. It is driving forward the concept of “agentic” development – where developers delegate tasks to AI agents and oversee the results, rather than micromanaging every line of code.

Limitations and Challenges

Despite the excitement around Jules, it’s important to maintain a realistic perspective. As an experimental product in beta, Jules has its share of limitations and rough edges. Engineers evaluating it for real-world use should consider the following practical drawbacks:

- Early Stage “AI Intern” Behavior: Jules is still essentially a very smart, very fast junior developer – which means it can make mistakes or produce suboptimal code. Google has been candid that “Jules may make mistakes” and is in early development. You might find that some fixes work but aren’t the cleanest solution, or a feature implementation meets the prompt literally but not the spirit of what you wanted. It’s not infallible. In internal testing, Jules boosted productivity but still required oversight. In critical codebases, you would need to thoroughly review Jules’s output just as you would a human newcomer’s code.

- Quality of Code and Style: Initial user feedback has noted that Jules’s code contributions can sometimes be verbose. For instance, one Reddit user observed that Jules tended to introduce duplicate code and unnecessary boilerplate in some cases, calling its approach “overcomplicated” compared to a human coder’s touch (whereas Codex might have been too minimal). Ensuring the AI adheres to your project’s style and best practices might require additional prompting or post-hoc editing. The interactive feedback helps mitigate this (you can ask Jules to refactor its own output), but there is a learning curve to getting the right prompts and corrections in place.

- Dependency on Good Prompts and Setup: Jules doesn’t magically know the full intent behind an issue or task without guidance. Crafting a clear prompt is essential to get good results. Vague instructions like “improve performance” might lead to inconclusive changes, whereas specific tasks work better (e.g. “Optimize the sorting algorithm in utils.py for time complexity”). Similarly, Jules’s success often hinges on having a proper environment setup script and tests. If the project lacks tests or uses a complex proprietary build that Jules can’t easily run, it may struggle to validate its changes. Long-lived processes (like a dev server) are not supported in its setup, so it expects discrete build/test commands. Projects that are difficult to set up in a clean VM will require more hand-holding, such as a detailed setup script for Jules.

- Scope and Context Limitations: While Jules can handle large projects (thanks to streaming file reads and selective context), there are practical limits. The underlying model has a context window limit, so Jules can’t load every file at once. It needs to be strategic about what it reads (likely it uses repository indexes or focuses on relevant files per task). For extremely large monorepos or highly interdependent systems, Jules might miss a cross-cutting concern or a subtle side effect outside its current scope. It’s best at relatively self-contained tasks. If a task spans too many modules or requires broad architectural knowledge, Jules might need to tackle it in smaller sub-tasks, or it could get confused. The developer may need to break down a big request into smaller prompts.

- Performance and Waiting Time: Running an agent in the cloud to do a task isn’t instantaneous. In many cases Jules will be faster than a human (especially for mechanical tasks), but there is overhead: provisioning the VM, installing dependencies, and so on. A change that an experienced developer could make in a minute or two might still take Jules several minutes to plan, execute, and report, especially if building or testing the project is involved. If Jules encounters snags and retries, that adds more time. So for quick one-line fixes, invoking Jules might not save time compared with just doing it yourself. The benefit really shows for more complex tasks or batches of tasks done asynchronously. But developers need to get used to a new rhythm of working, more like running a long test suite or CI job.

- Usage Limits and Resource Constraints: In the current beta, there are hard limits on how much you can use Jules (to prevent abuse and manage costs). As mentioned, it’s capped at 5 tasks per day for most users and 2 concurrently. Heavy users can request higher limits, but clearly it’s not wide open for unlimited coding just yet. Also, because it’s free in beta, Google likely has guardrails on the complexity of tasks (for example, extremely large code generation might be truncated). There might also be occasional queue times if many users are using the service at once. All these are typical for a beta product but mean you can’t (at this time) rely on Jules for everything or for very large-scale automated refactoring without planning around these limits.

Looking for Jules-like functionality but with uncapped usage? Engine is generally available today for individuals and teams of all sizes.

- Security and Privacy Concerns: While Google has stressed privacy (no training on your private code, data isolation), some organizations will still be cautious about sending code to any cloud service. Jules requires connecting to GitHub and effectively uploads your repository code to Google’s servers (into the VM) to work on it. For open-source projects this is fine, but private enterprise code might be subject to policies. Google’s assurances are strong here, but companies will evaluate compliance and risk. Additionally, Jules having the ability to execute code means if there were ever a bug or misuse, it’s theoretically possible for it to run destructive commands. Google likely has safety checks and the requirement for user approval helps, but as with any powerful tool, one must use it prudently and perhaps avoid running it on code or with privileges you’re not comfortable with.
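
Given the usage caps discussed above (5 tasks per day and 2 running at once in the beta), a team queuing up a backlog may want to plan it into daily batches and concurrent waves. This is a minimal sketch, assuming those default limits stay fixed. They may differ for your account or change after the beta.

```python
DAILY_LIMIT = 5       # beta default described above: tasks per day
CONCURRENT_LIMIT = 2  # beta default described above: tasks running at once


def schedule_backlog(
    tasks: list[str],
    daily: int = DAILY_LIMIT,
    concurrent: int = CONCURRENT_LIMIT,
) -> list[list[list[str]]]:
    """Split tasks into days of at most `daily`, and each day into waves of at most `concurrent`."""
    days = [tasks[i:i + daily] for i in range(0, len(tasks), daily)]
    return [[day[j:j + concurrent] for j in range(0, len(day), concurrent)] for day in days]


backlog = [f"task-{n}" for n in range(1, 13)]  # 12 hypothetical tasks
for day_number, waves in enumerate(schedule_backlog(backlog), start=1):
    print(f"Day {day_number}: {waves}")
```

Under these limits, twelve tasks take three days (5, 5, then 2), and each day runs in waves of two.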

Finally, it must be said that Jules does not replace human developers – nor does it claim to. Its utility lies in automating the boring stuff and speeding up the routine tasks. But the creative, architectural, and highly complex decisions still firmly require human judgment. Jules might get you 80% of the way on a given task, but that last 20% – making sure the change actually fits the product requirements, has no subtle bugs, and is coded in an optimal way – that’s where the developer’s expertise comes in. If used recklessly (e.g., blindly merging Jules’s outputs without review), one could introduce bugs or technical debt. In critical environments like medical or financial software, AI-generated changes would likely need even more stringent review and testing.

In short, Jules is a powerful assistant, but not a silver bullet. It requires a mindful approach: clear instructions, oversight, and willingness to iterate. As the AI improves, some of these limitations will likely diminish (the model will get smarter, the style more refined, the speeds faster). But today, any team adopting Jules should treat it as a junior developer: one who can work incredibly fast and handle grunt work, but who is still learning the ropes of writing quality code.

Jules AI vs. Other Coding Tools

How does Google Jules AI stack up against other AI coding assistants and agents on the market? Given the rapid evolution in this space, it’s helpful to compare their approaches and features:

- Jules vs. GitHub Copilot: GitHub Copilot pioneered AI pair-programming, but it originated as an IDE assistant that works primarily as you type, suggesting code completions and small functions within your editor. It’s great for boosting individual coding productivity in real time. However, vanilla Copilot doesn’t autonomously fix bugs or create multi-file changes without user guidance. Microsoft has been augmenting Copilot with more agent-like capabilities: the Copilot agent can take higher-level instructions, run commands, and create PRs, similar to Jules. The key difference is ecosystem integration. Copilot is tightly integrated into editors like VS Code and the GitHub ecosystem, and it benefits from OpenAI’s coding expertise. Jules, on the other hand, integrates with GitHub but currently runs through Google’s systems and web UI. Jules’s strength is in planning and background execution; Copilot (today) is more synchronous in nature and may not yet match Jules’s multi-step autonomous planning in maturity. That said, Microsoft’s vision for Copilot is converging towards what Jules does, so we can expect the gap to narrow.

- Jules vs. OpenAI Codex and ChatGPT: OpenAI’s Codex model originally powered Copilot and was accessible via an API for single-turn prompts (e.g., “write a function that…”). ChatGPT (especially GPT-4) has become a go-to assistant for many developers to ask coding questions or even request code snippets. But using ChatGPT on a whole repository is cumbersome: you have to paste code or summarize context, and it won’t directly integrate with your tools. OpenAI has since released a far more agentic Codex, both as a CLI tool and as a remote agent. Indeed, just as Google I/O was showcasing Jules, OpenAI released a research preview of an autonomous Codex agent that works in a sandbox, much like Jules. This Codex agent can read a codebase, fix bugs, and answer questions about it in a separate environment. In terms of capabilities, Jules and OpenAI’s Codex agent are on a similar track: both can run code, create plans, and make changes. One tester who tried both remarked that “Jules beats Codex by a lot” on a task like writing comprehensive unit tests, attributing that to Jules’s planning and internet access (for installing packages or looking things up). However, these comparisons are anecdotal, and both tools are improving rapidly. A difference to note is model power: Codex’s custom model is extremely capable with code, and by some metrics might still hold an edge. Google’s Gemini 2.5 Pro is its answer to that, and claims of superiority are hard to judge without extensive benchmarks. We do know Gemini 2.x was trained with a focus on reasoning and tool use, which bodes well for Jules. The competition here will likely oscillate as each company updates its models.

- Jules vs. Cursor, Replit & Others: Apart from the big players, startups and open-source projects also offer AI coding tools. Cursor is an IDE with an integrated AI assistant that can make multi-file edits via chat, somewhat like having ChatGPT in your editor. It doesn’t have an autonomous “go fix this and open a PR” mode like Jules, but a user can instruct it to refactor or generate code across files. Replit’s Ghostwriter can generate whole functions or even build small projects within Replit’s cloud IDE, but again, it is more interactive than autonomous. Amazon CodeWhisperer is another Copilot-like tool focused on suggestions and security analysis, not an agent. Jules currently stands out for automation: it takes the initiative once given a task. Some open-source projects (inspired by AutoGPT) have attempted “AI developer agents” that read a Git repo and create PRs, but these are experimental and require a lot of hand-holding. In contrast, Jules is a polished, integrated solution out of the box.

- Google’s own Cloud Ecosystem: It’s worth noting that Jules is part of Google’s broader developer AI push. Alongside Jules, Google offers Gemini Code Assist (an AI code completion and chat tool in Google Cloud) and AI features in Firebase and Android Studio. For example, Firebase’s AI helper can generate app code and has a UI for non-coders, and Android Studio might integrate Gemini for code suggestions. So Google is tackling AI coding from multiple angles, with Jules as the specialized tool for repository-level autonomous coding. If you’re deep in Google’s cloud and developer ecosystem, Jules may eventually integrate more smoothly with the tools you already use (imagine linking Jules with Cloud Build or Colab notebooks). Meanwhile, Microsoft is likely to integrate Copilot deeply with Azure DevOps, GitHub Actions, and the like. So part of the choice may come down to which ecosystem you’re in.

- Jules vs. Engine: Unlike Jules, Engine is designed as a fully autonomous software engineer that operates continuously, monitoring workflow tools to pick up tickets and ship pull requests without being prompted, while also accepting ad-hoc tasks. Whereas Jules executes scoped tasks on demand in a sandboxed environment, Engine behaves more like an always-on teammate that contributes to projects proactively. Jules excels at safe, auditable execution with strong developer-in-the-loop control; Engine trades some of that precision for greater autonomy. For teams seeking high-trust, agent-driven automation across the stack, Engine pushes further into autonomy than Jules’s current task-based model. Engine is also more flexible and configurable, which may appeal to power users.

In summary, Jules belongs to a new generation of coding assistants that act as autonomous agents rather than mere autocomplete. It compares favorably with the early versions of similar agents from OpenAI and Microsoft, especially in its comprehensive approach to planning and its Google-grade infrastructure. But it’s also entering a fast-moving race. Developers could benefit from trying multiple options: for instance, using ChatGPT/GPT-4 for brainstorming or algorithm help, Copilot for on-the-fly coding in the IDE, and Jules for larger-scale codebase tasks. Each has its strengths. If Jules continues to evolve quickly (and if Google’s claim that a quarter of its own new code is AI-generated holds true), it could become a dominant tool, especially once it is out of beta and possibly integrated into common IDEs or cloud platforms.

Performance and Impact on Developer Productivity

It’s natural to ask: how much does Jules actually help in real-world development? So far, the answer is cautiously positive. Google’s internal metrics and anecdotal community feedback both suggest a productivity boost, with caveats.

On the quantitative side, there isn’t yet a public, definitive benchmark comparing Jules to human performance across many scenarios. However, some data points are telling:

- Internal Usage at Google: Sundar Pichai (Google’s CEO) stated that by late 2024, over 25% of new code at Google was already being generated by AI. Jules is presumably part of that AI toolset, alongside other code-generation tools. Either way, the figure indicates significant internal adoption. Moreover, Google wouldn’t deploy such tools internally without seeing quality results: its codebase is massive and mission-critical, so an AI contributing a quarter of new code implies trust that the productivity gains outweigh the overhead of fixing AI mistakes. It’s likely that Jules (or its prototypes) was used internally on Google’s own repos, boosting engineers’ output especially on tests and boilerplate.

- Benchmarks: Earlier, we mentioned SWE-bench Verified, on which a Gemini-driven agent achieved a 51.8% task completion rate. This suggests that on a standardized set of programming tasks that mimic real bug fixes and features, the AI could autonomously resolve about half of them correctly. That’s a promising baseline, though not yet superhuman, and each iteration of the model and tooling should improve it. Gemini 2.5 Pro plus Jules might score even higher on such benchmarks, given model improvements and the ability to use tools (e.g., running tests to verify correctness). It will be interesting to see future research or open challenges that pit Jules and similar agents against each other.

- Community Experiences: The developer community’s experiences with the Jules beta provide qualitative evidence. Many have expressed amazement at seeing an AI agent create a non-trivial PR while they were “hands-off.” One user on Twitter shared that Jules analyzed their entire project and generated a suite of unit tests, something that would otherwise have taken significant time. Others noted successful bug fixes implemented by Jules with minimal prompting. Some have even hyped it as a “Codex killer” because it feels like a leap in capability. On the flip side, users have also shared cases where Jules struggled (for example, misinterpreting an ambiguous feature request, or not knowing about an internal proprietary library). These cases highlight that productivity gains are not uniform; they depend on how well the task fits Jules’s strengths.

- Time Savings: For tasks Jules handles well, the time saved can be substantial. Writing tests is a great example: developers often begrudge the time spent writing exhaustive tests for legacy code. Jules can draft those quickly, leaving the developer to tweak or verify them. A task that might take a human 2 hours could be done by Jules in, say, 15 minutes, plus another 15 to review and merge, cutting the effort to roughly a quarter. Multiply that across many tasks, and you see why Google’s developers might get a notable productivity boost. That said, for a brand-new feature involving many design decisions, Jules might not save much time, because you have to steer it carefully and may end up rewriting parts. It shines on well-defined tasks.
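The time-savings estimate in the last bullet is easy to make explicit. Taking the illustrative figures from the text (a 2-hour manual task versus about 15 minutes of Jules runtime plus 15 minutes of review and merge), a back-of-the-envelope comparison, not a measurement, works out as follows:

```latex
\begin{aligned}
T_{\text{manual}} &= 120\ \text{min} \\
T_{\text{Jules}}  &= T_{\text{run}} + T_{\text{review}} = 15\ \text{min} + 15\ \text{min} = 30\ \text{min} \\
\text{saving}     &= 1 - \frac{T_{\text{Jules}}}{T_{\text{manual}}} = 1 - \frac{30}{120} = 0.75
\end{aligned}
```

Under these assumed figures, the human effort drops to a quarter of the manual time. If review takes longer, for example because the generated tests need substantial rework, the saving shrinks accordingly, which is why the gains concentrate in well-defined tasks.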

One area to watch is the quality and maintainability of Jules’s output. Productivity isn’t just about speed but also about whether the code is good; otherwise you pay the cost later in debugging or refactoring. If Jules writes a feature fast but it’s buggy or hard to maintain, the short-term gain is lost. So far, Jules’s design (requiring tests to pass, letting the developer review the diff, and so on) aims to ensure quality. Jules’s code is merged only when a human okays it, so ideally only good code gets in. As these agents improve, we might even measure their contributions in terms of defect rate or code review acceptance rate. For example, if 80% of Jules’s PRs were approved with only minor fixes, while an average junior developer’s first PRs required more changes, that would be an interesting measure of effectiveness.
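The review-acceptance metric suggested above could be computed from a team’s own PR history. The TypeScript sketch below assumes a hypothetical PullRequestRecord shape (you would fill it from whatever PR data your Git host lets you export) and a hypothetical bot username, jules-bot; it is not a feature of Jules, and “minor fixes” is arbitrarily defined here as at most one round of requested changes.

```typescript
// Hypothetical record shape; populate it from your own PR history.
interface PullRequestRecord {
  author: string;         // e.g. "jules-bot" (hypothetical) or a human username
  approved: boolean;      // was the PR eventually approved and merged?
  revisionRounds: number; // review rounds that requested changes
}

interface AcceptanceStats {
  total: number;
  approved: number;
  approvedWithMinorFixes: number;
  acceptanceRate: number; // approved / total
  minorFixRate: number;   // approved within maxMinorRounds rounds / total
}

// Summarize review outcomes for PRs opened by one author account.
function acceptanceStats(
  prs: PullRequestRecord[],
  author: string,
  maxMinorRounds = 1,
): AcceptanceStats {
  const mine = prs.filter((pr) => pr.author === author);
  const approved = mine.filter((pr) => pr.approved);
  const minor = approved.filter((pr) => pr.revisionRounds <= maxMinorRounds);
  const total = mine.length;
  return {
    total,
    approved: approved.length,
    approvedWithMinorFixes: minor.length,
    acceptanceRate: total === 0 ? 0 : approved.length / total,
    minorFixRate: total === 0 ? 0 : minor.length / total,
  };
}

// Usage with made-up sample data (illustrative only, not real results).
const sample: PullRequestRecord[] = [
  { author: "jules-bot", approved: true, revisionRounds: 0 },
  { author: "jules-bot", approved: true, revisionRounds: 1 },
  { author: "jules-bot", approved: true, revisionRounds: 3 },
  { author: "jules-bot", approved: false, revisionRounds: 2 },
  { author: "alice", approved: true, revisionRounds: 2 },
];
console.log(acceptanceStats(sample, "jules-bot"));
```

Running the same function over PRs from human authors (for example, recent hires) would give the kind of side-by-side comparison the paragraph imagines.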

From a team process perspective, Jules could shorten development iterations. Imagine a weekly sprint where mundane tasks are all done by mid-week via Jules, freeing humans to focus on innovative work. It could also reduce backlog by addressing trivial issues continuously. Another impact is on onboarding new developers: ironically, an AI like Jules could handle the simple tasks a new hire might get, meaning human new hires can jump to more meaningful work (or conversely, maybe Jules is the new “junior dev” and human hires need to focus on higher-skilled roles).

One must also factor in the learning curve and overhead of using Jules. Developers have to learn how to prompt effectively, how to set up their project for Jules, and how to interpret its plans. This is a new skill (AI assistant literacy) that takes time to build, and in early adoption the learning overhead dampens immediate productivity gains. Over weeks of use, however, developers tend to get much better at working with the AI: they learn which tasks to delegate and how to phrase requests. So a team that tries Jules for just one day might not see huge gains, but over a month it could see significant efficiency improvements as the developers and the AI “learn” to work together.


Finally, consider the cost angle: during beta Jules is free with limited usage, but later it will likely be a paid service or require Google Cloud credits. The productivity gains will have to be worth the cost. If Jules can save an engineer a few hours of work each week, that easily justifies a reasonable subscription fee in an enterprise context. But if it’s used only sparingly, companies might be hesitant to pay much for it. Google hasn’t announced pricing yet, so it remains to be seen how they’ll value it. For now, developers can experiment with the beta to gauge the value for themselves.
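The cost argument above reduces to a simple break-even condition. Let h be the hours an engineer saves per month (net of review time), c the loaded hourly cost of that engineer, and F the per-seat monthly fee. All three are values you would supply yourself, since Google has not published pricing. The subscription pays for itself when:

```latex
h \cdot c \;\ge\; F
\quad\Longleftrightarrow\quad
h \;\ge\; \frac{F}{c}
```

For instance, with a purely hypothetical fee of F = $50 per month and c = $100 per hour, the tool breaks even after half an hour of saved work per month. The harder question in practice is measuring h honestly once review and rework are subtracted.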

Real-World Developer Experience

What is it like for a developer to actually use Jules day-to-day? Let’s paint a picture of the experience, combining the UI workflow with a critical view of how it fits into development practices:

When you first try Jules, you start at the web interface (jules.google.com). After you connect your GitHub account, you’re presented with a dashboard where you select a repository and branch to work on. The interface has a prompt box where you describe your task in natural language. You might type something like: “This project is on an old version of Node. Upgrade it to Node 18 LTS and ensure it compiles and all tests pass.” Then you hit a button labeled “Give me a plan.”

Jules takes a minute to clone the repo and set up the VM. You might see a spinner indicating it’s preparing the environment. Then, the plan appears: a formatted list of steps. In our example, it could be:

- Update Node.js to v18 – Jules says: “Use nvm to install and switch to Node v18. Update the engines field in package.json to reflect the new Node version.”

- Install Yarn (if the project uses it) – maybe Jules deduces from the project files that Yarn is used, so it plans to install it in the VM.

- Install project dependencies – run yarn install.

- Compile/Build the project – ensure it builds on Node 18.

- Run tests – execute the test suite to verify everything works.

- Submit changes – prepare a commit updating the Node version and any lockfiles.
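The “update the engines field” step is simple enough to sketch. The TypeScript function below, setNodeEngine, is a hypothetical helper and not Jules’s actual implementation: it takes a parsed package.json object and returns a copy whose engines.node constraint targets the new major version, leaving every other field untouched. It assumes the common “>=N” range style; projects that pin exact versions or use other semver ranges would need different handling.

```typescript
// Minimal shape of the package.json fields we care about; other keys pass through.
interface PackageJson {
  name?: string;
  engines?: Record<string, string>;
  [key: string]: unknown;
}

// Return a NEW package.json object with engines.node set to ">=<major>".
// Hypothetical helper: it does not parse or preserve other semver range styles.
function setNodeEngine(pkg: PackageJson, major: number): PackageJson {
  if (!Number.isInteger(major) || major <= 0) {
    throw new Error(`invalid Node major version: ${major}`);
  }
  return {
    ...pkg,
    // Keep any other engine constraints (e.g. npm) and override only "node".
    engines: { ...(pkg.engines ?? {}), node: `>=${major}` },
  };
}

// Usage: simulate the "16 -> 18" change from the walkthrough.
const pkgBefore: PackageJson = { name: "demo-app", engines: { node: ">=16", npm: ">=8" } };
const pkgAfter = setNodeEngine(pkgBefore, 18);
console.log(JSON.stringify(pkgAfter.engines)); // {"node":">=18","npm":">=8"}
```

Returning a copy rather than mutating the input keeps the original object available for producing a before/after diff, which is the kind of change a reviewer would inspect.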

Seeing this plan, you’re impressed: Jules identified all the sub-tasks you’d otherwise do manually. But you notice a small mistake: your project uses npm, not Yarn. No problem. There’s a chat box where you can give feedback, so you type: “Use npm instead of yarn; this project doesn’t use Yarn.” Jules quickly responds with an updated plan: the Yarn installation step is dropped, dependency installation now reads “Install project dependencies (using npm),” and the subsequent steps are adjusted accordingly. Satisfied, you click Approve Plan.
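The Yarn-versus-npm slip shows why plan review matters. A common heuristic for inferring a Node project’s package manager is to check which lockfile is committed. The TypeScript sketch below, detectPackageManager, is a hypothetical helper that applies this heuristic (it is not necessarily how Jules decides). It also honors the packageManager field that Corepack reads from package.json.

```typescript
type PackageManager = "npm" | "yarn" | "pnpm" | "unknown";

// Lockfile name -> the package manager that writes it, checked in this order.
const LOCKFILES: ReadonlyArray<readonly [string, PackageManager]> = [
  ["pnpm-lock.yaml", "pnpm"],
  ["yarn.lock", "yarn"],
  ["package-lock.json", "npm"],
  ["npm-shrinkwrap.json", "npm"],
];

// Infer a Node project's package manager from its top-level file names and,
// if present, the Corepack-style "packageManager" field (e.g. "yarn@4.1.0").
function detectPackageManager(
  files: string[],
  packageManagerField?: string,
): PackageManager {
  // An explicit packageManager field takes precedence over lockfile guessing.
  if (packageManagerField) {
    const name = packageManagerField.split("@")[0];
    if (name === "npm" || name === "yarn" || name === "pnpm") return name;
  }
  const present = new Set(files);
  for (const [lockfile, manager] of LOCKFILES) {
    if (present.has(lockfile)) return manager;
  }
  return "unknown";
}

// Usage: the walkthrough's repo ships package-lock.json, so npm is inferred.
console.log(detectPackageManager(["package.json", "package-lock.json", "src"])); // npm
console.log(detectPackageManager(["package.json", "yarn.lock"])); // yarn
```

If a repository contains more than one lockfile, the first match in LOCKFILES wins. That is an arbitrary tie-break, and a stricter tool might flag the ambiguity for the developer instead.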

Now Jules is off to the races. You switch to the Activity Feed tab. Line by line, it logs actions:

- Cloning repo… (you see a confirmation when done)

- Using nvm to install Node v18… (perhaps it shows console output of downloading Node)

- Updated package.json engines field (from 16 -> 18) – and you see a diff snippet inline showing that change.

- Running npm install… – if it encounters an error (say a package needed Node 16), it might log a warning.

- Compiling project… – if your project has a build command, it runs it.

- Running tests… – you see test results output, suppose all tests passed.

If a test failed, Jules would log the failure and might even try to fix it. Say one test expected Node 16-specific behavior. Jules might pause and ask you via the chat: “Test X failed because of a deprecation in Node 18. Should I apply a known fix for it?” This gives you context, and you can discuss or instruct it on the spot.
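The execute-and-verify phase described here, including the pause to consult the developer when a test fails, can be modeled as a small control loop. The TypeScript sketch below is a hypothetical simplification and not Jules’s actual architecture or API. Step, runStep, askDeveloper, and the retry cap are invented for illustration. The loop is synchronous for brevity, whereas a real agent would run steps asynchronously in its VM.

```typescript
// Result of running one plan step in the agent's sandbox (hypothetical shape).
interface StepResult {
  ok: boolean;
  log: string;
}

interface Step {
  description: string;
}

// What the developer can tell the agent after a failed step.
type Decision = "retry" | "skip" | "abort";

interface RunReport {
  status: "completed" | "aborted";
  log: string[];
}

// Execute an approved plan step by step. When a step fails, pause and ask
// the developer how to proceed instead of silently pushing ahead.
function executePlan(
  plan: Step[],
  runStep: (step: Step) => StepResult,
  askDeveloper: (step: Step, result: StepResult) => Decision,
  maxRetries = 2,
): RunReport {
  const log: string[] = [];
  for (const step of plan) {
    let retries = 0;
    for (;;) {
      const result = runStep(step);
      log.push(`${step.description}: ${result.ok ? "ok" : "failed"}`);
      if (result.ok) break; // move on to the next step
      const decision = askDeveloper(step, result);
      if (decision === "abort") return { status: "aborted", log };
      if (decision === "skip") break; // developer chose to skip this step
      retries += 1; // decision was "retry"
      if (retries > maxRetries) return { status: "aborted", log };
    }
  }
  return { status: "completed", log };
}

// Usage: the test step fails once, then passes after the developer says "retry".
let demoTestRuns = 0;
const demoReport = executePlan(
  [{ description: "npm install" }, { description: "npm test" }],
  (step) => {
    if (step.description !== "npm test") return { ok: true, log: "done" };
    demoTestRuns += 1;
    return demoTestRuns === 1
      ? { ok: false, log: "Test X failed: deprecation in Node 18" }
      : { ok: true, log: "all tests passed" };
  },
  () => "retry", // stands in for "yes, apply the fix"
);
console.log(demoReport.status, demoReport.log);
```

In this usage, the test step fails once, the developer answers “retry,” and the second run passes, so the report ends with status “completed.” Handing control back to a human on failure, rather than looping indefinitely, mirrors the developer-in-the-loop design the walkthrough describes.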

In our scenario, assume tests passed. Jules then finalizes its work. It shows a Task Summary: Files changed: package.json, package-lock.json. Total runtime: 2m 45s. Lines changed: +2/-1. It even suggests a commit message: “chore: upgrade Node.js to v18 and update dependencies.” At this point, you can review the Code Diff in full. The right-hand pane lets you open each modified file and see all changes Jules made. Everything looks good – the engines field updated, lockfile updated, maybe a minor tweak somewhere for compatibility.

With a click on “Create branch”, Jules pushes the changes to GitHub on a new branch named (for example) jules-node18-upgrade. You go to your repository and open a Pull Request from that branch. Jules’s commit is there, with Jules listed as the author (perhaps with a Google Labs bot avatar). The PR description might include the summary of changes that you saw, which you (as the PR author) can edit or augment. You add reviewers from your team.

During code review, your colleagues see that Jules did the heavy lifting. Maybe one reviewer notes, “We should also update the CI Node version.” That was outside the task Jules was given (it didn’t touch the CI config). You can make that change manually or, interestingly, ask Jules in chat to do it: “Also update the CI workflow to use Node 18.” Jules can then create an additional commit if it has access. This flexibility highlights how Jules can be used iteratively; you’re effectively pair-programming with an AI that can take larger initiative.

Finally, the PR is approved and merged. The Node upgrade that might have sat in the backlog for weeks happened with minimal human toil.

This story illustrates the flow and feel: working with Jules is very much like delegating to a competent assistant. But it also shows the realities of that experience:

- You have to double-check things (such as whether the project uses Yarn or npm).

- You handle any high-level decisions (e.g., what to do with a failing test that requires a product decision).

- Jules only does what you ask, so thinking of edge cases (like the CI config) is still on you.

For developers, using Jules can be both empowering and a bit unnerving at first. Empowering because you realize you can get a lot done by simply describing the outcome you want. Unnerving because you have less “hands-on keyboard” control – you must trust the AI to some extent and verify after. There’s a psychological adjustment: trusting an AI agent to write code while you watch or do something else. Many developers have reported a sense of “wow, the future of work” seeing Jules handle a task fully, as well as a realization that their role might gradually shift more towards supervising AI outputs and doing the creative integration work.

Teams adopting Jules will likely develop new conventions. For example, code reviews might include a checklist item: “Was this PR AI-generated? If so, double-check for any corner-case issues.” There might be discussions on what tasks are appropriate to delegate to Jules versus what should be done manually. Over time, as confidence grows, those lines may move. Maybe today you’d trust Jules with test writing but not with a critical algorithm design; next year you might trust it with more as it improves.

One practical consideration is training and culture. Developers need to learn how to write effective prompts for Jules and interpret its output, and this might become a sought-after skill (call it “Jules-driven development”). It also raises interesting questions about attribution and learning: new developers often learn by doing grunt tasks themselves, so if Jules takes those over, juniors may need other ways to gain that foundational experience. It’s analogous to how calculators changed the way students learn math; you still need to understand the problem, even if you don’t do every step by hand.

Conclusion: A Candid Take on Jules for Engineers

Google’s Jules AI agent is an exciting leap toward more autonomous software development. For professional engineers and developers, it offers a tantalizing boost in productivity by automating the tedious parts of coding. Its core idea – “let the AI handle the boring stuff while you focus on what actually matters” – is one that resonates with anyone who has slogged through writing boilerplate or fixing mundane bugs. Jules shows that AI can move beyond being just a coding assistant to being a semi-autonomous agent operating within our development workflows.

In our deep review, we’ve seen that Jules AI brings robust capabilities: it can fix bugs, write tests, update code across files, and do it all in an asynchronous, plan-driven manner. It leverages the power of Google’s Gemini models and integrates beautifully with GitHub, offering a developer experience that is surprisingly smooth for such a futuristic concept. Innovative touches like plan transparency, interactive feedback, and audio summaries indicate that Google has put serious thought into making the agent practical and developer-friendly.

However, we’ve also peeled back the layers on its limitations. Jules is not a magic wand that instantly turns ideas into perfect code. It’s a helper with its own learning curve and quirks. It excels at clearly defined tasks, but less so at ambiguous ones. It can draft code in minutes, but you may spend time reviewing and tweaking that code. Put critically, Jules currently feels like an extremely capable junior developer on the team: one who can tirelessly generate solutions 24/7 but still needs a senior engineer’s oversight to ensure those solutions are correct and optimal. That’s not a knock against it; in fact, that paradigm can already save teams significant time and let humans concentrate on higher-level problems.

For those wondering “Should I use Jules in my workflow?” – the answer depends on your context. If you’re a developer who often finds yourself doing repetitive coding tasks, managing a large codebase with lots of mechanical upkeep, or maintaining legacy systems that need incremental improvements, Jules could be a godsend. It will handle the grunt work and free your schedule for creative development. On the other hand, if your day-to-day is highly experimental, algorithmically complex coding where every line is novel, Jules might have less to latch onto – you’d still be breaking new ground that AI can’t yet navigate without close guidance.

Comparatively, Jules stands out in the AI coding tool landscape, at least for now. It’s part of a vanguard along with Microsoft’s evolving Copilot agent and OpenAI’s Codex agent. All of these are pushing toward a future where “apps are described into existence,” as Google’s Josh Woodward put it. The idea that a developer’s job could shift from writing detailed code to supervising AI-written code is profound. Jules is one of the first real implementations of that idea aimed at everyday developers (not just AI researchers). In that sense, it’s a milestone: it shows what’s possible when you connect a powerful LLM to the full toolchain of software development.

That said, Jules is not yet widely available in stable form – it’s in beta (free to try as of 2025) with certain restrictions. It will be interesting to watch how Google iterates on it. Will it expand language support beyond the initial set? How will they price it? Will Jules be integrated into Google’s Cloud IDEs or remain a separate web app? And importantly, how will developers adapt their practices to make the most of such agents?

In conclusion, Google Jules AI is technically impressive and holds a lot of promise. It can certainly be useful right now in real-world coding and engineering contexts, especially for automating well-scoped tasks. Its innovative features like asynchronous operation and multi-step planning give it an edge in the current generation of tools. But using Jules effectively requires a thoughtful approach: leveraging its strengths (speed, thoroughness, lack of boredom) while compensating for its weaknesses (judgment, nuance, creativity). Developers who take the time to integrate Jules into their workflow, and treat it as a partner, are likely to find that it pays back in productivity what they invest in oversight. The candid truth is that Jules won’t replace you, but it might just make you a more efficient and happier coder by taking the drudgery off your plate.

As the AI coder landscape evolves, Jules is a sign of what’s to come: not just smarter code suggestions, but actual AI participants in our software projects. It’s an exciting time to be a developer, witnessing these tools mature. Jules isn’t perfect (and one should remain critical of its outputs), but it’s a big stride toward the long-term vision of AI-augmented software engineering – where humans and AI agents collaborate to build better software faster. For now, engineers can experiment with Jules and get a taste of that future, all while keeping their critical eye and coding standards sharp. After all, in software development, automation is welcome, but accountability remains key – and Jules, for all its autonomy, smartly keeps the developer in the driver’s seat.

Engine: a model-agnostic alternative to Jules

Engine runs on all the latest LLMs and is built with the flexibility to let power users get the most out of AI-powered software engineering. Engine connects to all major workflow tools to work alongside you: Linear, Jira, Trello, GitHub, GitLab and more.

Engine is available now for solo developers and teams.