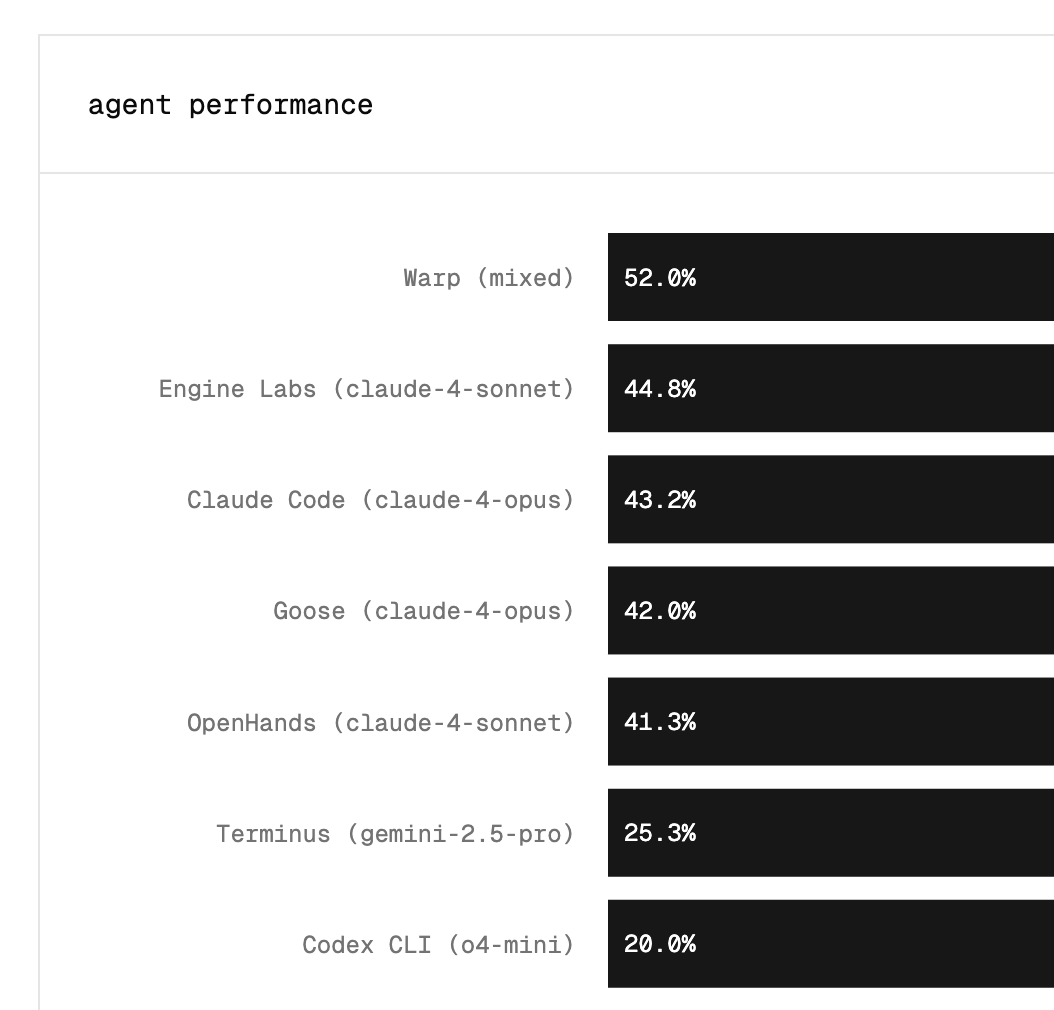

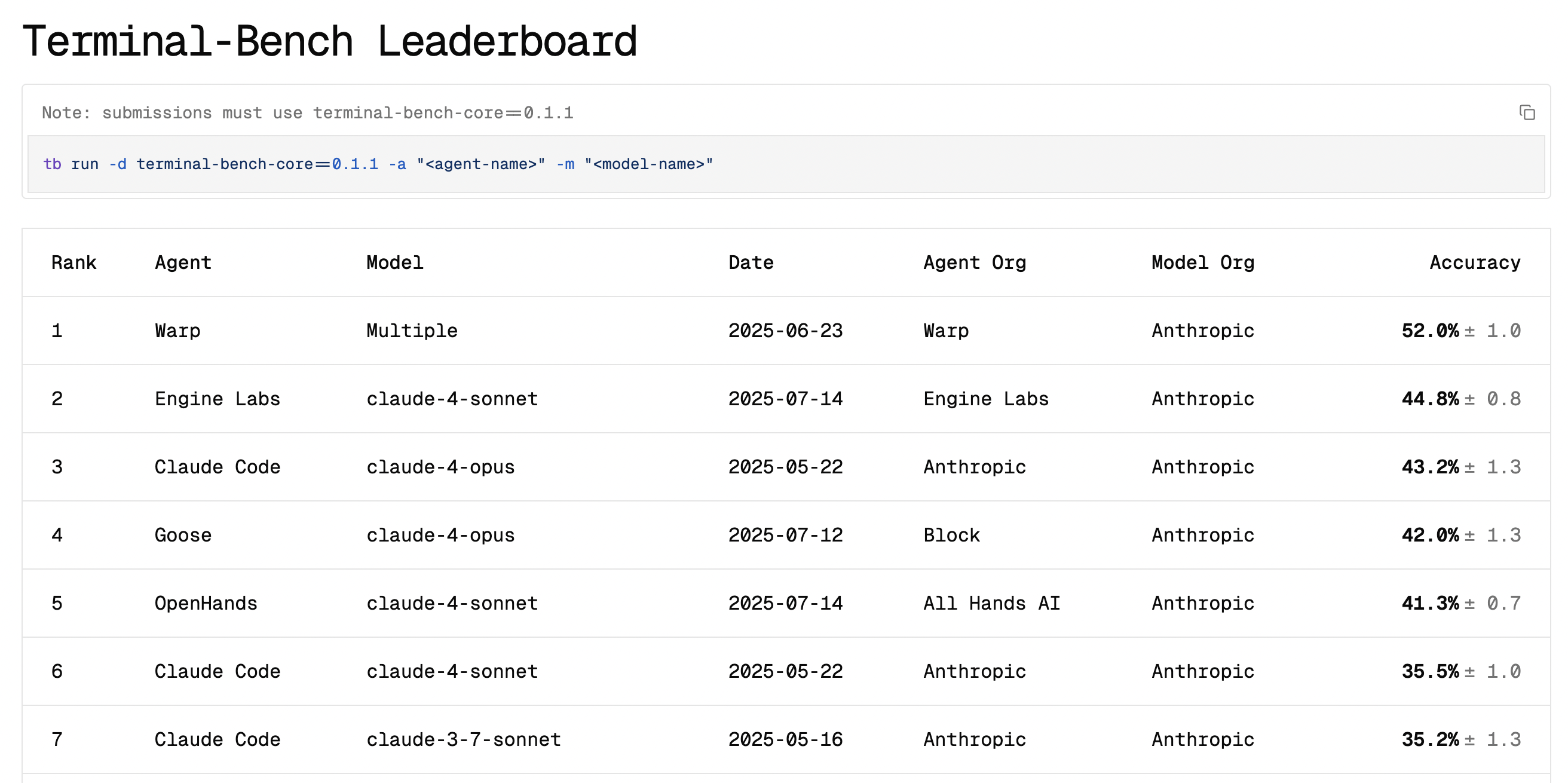

Engine Labs has posted a state-of-the-art score on Terminal Bench: the top score with a Claude Sonnet 4 class model and #2 overall. This improves on Claude Code’s equivalent score by ~25% (around 10 percentage points) and is a huge achievement for Engine’s small but experienced technical team.

LLM terminal use has emerged as an important competency for remote coding agents and is something Engine Labs has been researching for over 18 months. Here we provide some detail on our approaches to advance the state of the art.

Background

It’s table stakes now to give background coding agents a way to run Bash commands: OpenAI Codex does, Google Jules does, Devin does, etc.

It might be reasonable to ask, then, if there’s a standard implementation of a Bash tool for agents. In fact, there are a few! OpenAI and Anthropic have helpfully provided some reference implementations which broadly achieve the goal but differ in one important aspect. Anthropic’s example creates a persistent Bash session for the LLM to interact with, whereas OpenAI’s example runs an LLM-generated Bash command directly as a child process.

As it turns out, we’ve found that one of these is better than the other as a tool for a background coding agent.

We put the current incarnation of our coding agent, using Claude Sonnet 4 and its terminal tool, through its paces on Terminal Bench where, at the time of writing, we’re second on the leaderboard, even beating a few agents that were using Claude Opus 4!

What follows is a roughly chronological journey of the evolution of our agent’s terminal tool from the basic child-process implementation through to the tool that helped us score so highly on Terminal Bench.

Running commands as child processes

We write TypeScript at Engine Labs. The equivalent of Python’s subprocess module found in the reference implementations is Node’s child_process module. Our earliest iteration of a Bash tool did what OpenAI’s reference implementation does — it ran LLM-generated commands as child processes and returned command output.

```typescript
import { exec } from "child_process";
import { promisify } from "util";

const execAsync = promisify(exec);

// error handling omitted for clarity
async function BashTool(command: string): Promise<string> {
  // Each call starts a fresh shell, so no state carries over between commands.
  const { stdout } = await execAsync(command);
  return stdout;
}
```

This worked well for quite a long time. Switching to Bun as our runtime gave us a very slightly more ergonomic version of child_process.exec for free: Bun Shell (slightly ruined by the need to avoid escaping the command we were trying to run).

```typescript
import { $ } from "bun";

// error handling omitted for clarity
async function BashTool(command: string): Promise<string> {
  // `raw` stops Bun Shell from escaping the LLM-generated command string.
  const output = await $`${{ raw: command }}`.text();
  return output;
}
```

This lightweight implementation served us well until some edge cases started rearing their heads.

User interaction

Some commands ask for user input while they’re running. These will either hang or time out, depending on the implementation of the child-process-based tool.

Solutions

- Set environment variables to indicate that this isn’t possible, e.g. DEBIAN_FRONTEND=noninteractive.
- Instruct the agent to avoid commands that require user input, or to pass flags to disable user input where possible (e.g. sudo apt-get install -y ...).
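The first of these can be sketched as follows. This is a minimal illustration rather than our production code: the helper names nonInteractiveEnv and runNonInteractive are hypothetical, and it assumes the child-process tool shown earlier.

```typescript
import { exec } from "child_process";
import { promisify } from "util";

const execAsync = promisify(exec);

// Hypothetical helper: copy the base environment and add the variable
// that tells Debian tooling not to prompt.
function nonInteractiveEnv(
  base: Record<string, string | undefined>,
): Record<string, string | undefined> {
  return { ...base, DEBIAN_FRONTEND: "noninteractive" };
}

// error handling omitted for clarity
async function runNonInteractive(command: string): Promise<string> {
  const { stdout } = await execAsync(command, {
    env: nonInteractiveEnv(process.env),
  });
  return stdout;
}
```

This only helps with programs that honour the variable, which is why the second, prompt-based measure is still needed.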

REPLs

If the agent wants to start a REPL with something like python3 or node, this will not work at all since there is no persistent Bash session.

Solution

- Instruct our agent to write code to a file with other tools and only run the file with the Bash tool.

Other non-terminating commands

Alongside REPLs, there are other commands and programs that do not terminate without further user input. Most commonly these are web servers, though there were several instances of commands hanging forever waiting for a network response or similar.

Solution

- Implement a timeout mechanism.

Timeouts helped enormously with reliability, but raised an additional issue: supporting arbitrarily long-running commands, like long compilation steps or docker build, was no longer possible. This left us having to pick a sensible timeout length and adjust our agent system prompt and tool description accordingly.
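One way to add a timeout to a child-process tool is the timeout option that Node’s exec already accepts. The sketch below is an assumption about how such a tool could look, not a copy of ours: the function name, the BashResult shape and the 30-second default are all hypothetical.

```typescript
import { exec } from "child_process";

interface BashResult {
  output: string;
  timedOut: boolean;
}

// Hypothetical default; the right value depends on the workload.
const DEFAULT_TIMEOUT_MS = 30_000;

function bashToolWithTimeout(
  command: string,
  timeoutMs: number = DEFAULT_TIMEOUT_MS,
): Promise<BashResult> {
  return new Promise((resolve) => {
    exec(
      command,
      { timeout: timeoutMs, killSignal: "SIGKILL" },
      (error, stdout, stderr) => {
        // Node marks the error as `killed` when it terminated the child
        // itself, which is what happens when the timeout elapses.
        const timedOut = error !== null && error.killed === true;
        // Return whatever was captured, even if the command was cut short.
        resolve({ output: stdout + stderr, timedOut });
      },
    );
  });
}
```

Returning a timedOut flag alongside the partial output lets the agent tell "the command failed" apart from "the command was still running", which matters once the timeout is shorter than a legitimate build step.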

Switching to a persistent Bash session

After monitoring the usage of the child process Bash tool for a while, we eventually became dissatisfied with the extent to which the success of the tool was dependent on how well the agent followed instructions and how long a given command took to run.

So, we thought we’d try the persistent Bash session implementation to see if it was any better.

After a brief but painful attempt to implement our own terminal, we settled on using node-pty, the pseudo-terminal library behind VS Code’s terminal emulator.

However, we now had a new problem: how do we know if a command has finished running, or is waiting for user input? Unfortunately for us, this sounds almost like we’re asking if we can solve the halting problem!

“Solving” the halting problem

Though it perhaps seems like we were doomed from the beginning, we didn’t set out to handle every possible input, just most of the ones that our agents might run during the course of creating a PR. There were a number of tricks and heuristics that we thought we might be able to use to approximate a solution.

Many implementations (including Anthropic’s reference one) rely solely on a timeout to decide when the Bash session is accepting further user input. This works for short-running commands, but is not ideal for longer-running commands, as the tool will either error, or only return command output prior to the timeout.

Anthropic’s computer-use demo has a Bash tool implementation that offers one neat trick. This implementation appends an echo command after each LLM command that prints a sentinel value that can be searched for in the Bash tool output.

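This works for commands that return to the Bash prompt. It does not work if the command starts a REPL: the appended echo only runs once the REPL exits, so the sentinel never appears while the REPL is waiting for input.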
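The sentinel trick can be sketched in a few lines. This is an illustration in the spirit of that approach, not a port of Anthropic’s code: the sentinel string and both function names are hypothetical, and it assumes the session does not echo the typed command back into its output (as with a pipe-based session).

```typescript
// Hypothetical marker; any string unlikely to appear in real output works.
const SENTINEL = "<<command-finished>>";

// Append an echo so that Bash prints the marker once the command returns.
function withSentinel(command: string): string {
  return `${command}; echo '${SENTINEL}'`;
}

// Returns the command output once the marker has been seen, or null if
// the command has not (yet) handed control back to Bash.
function extractOutput(buffer: string): string | null {
  const index = buffer.indexOf(SENTINEL);
  if (index === -1) {
    return null; // still running, or sitting inside a REPL
  }
  return buffer.slice(0, index);
}
```

With a REPL such as python3, extractOutput keeps returning null, because the echo is queued behind a process that never exits on its own.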

A brief diversion into strace

We tried briefly to channel our inner Linux wizards and trace the system calls that the node-pty process was making, hoping to tell whether it was accepting user input before we sent LLM-generated commands.

A combination of not being certain we’d caught all the ways that a process could be waiting for user input and trouble running sudo in the strace-d terminal process led us to abandon this method.

(We think that it’s probably possible to implement a solid strace-based solution, but at the time, it was causing more trouble than it was worth. Let us know if you reckon you can do it!)

Output stability

One of the core tricks we use to decide whether the Bash session is ready for more input is to watch for any change in output over a short window of time.

For example, short-running commands like ls will end quickly, returning command output and then displaying the Bash prompt. After some time, we deem the output to be “stable” and can return the output to the agent.

```
engine@10:~/project$ ls
CONTRIBUTING.md Dockerfile LICENSE README.md package-lock.json package.json server.js tests
engine@10:~/project$
```

For a long-running command, e.g. running the python3 REPL, the Bash session will display the Python REPL prompt and nothing else. Again, after some time, the output is deemed “stable” and we can return the output to the agent.

```
engine@10:~/project$ python3
Python 3.12.3 (main, Jun 18 2025, 17:59:45) [GCC 13.3.0] on linux
Type "help", "copyright", "credits" or "license" for more information.
>>>
```

It may seem like that’s enough! But there are two more things to consider:

1. How long exactly should we wait for output to stabilise?

2. How much latency in the agent loop is tolerable?
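The stability check itself can be sketched as a small tracker fed from the terminal’s data callback. This is a simplified illustration under stated assumptions, not our implementation: the class name and windowMs parameter are hypothetical, and timestamps are passed in explicitly so the logic is easy to test.

```typescript
class OutputStabilityTracker {
  private buffer = "";
  private lastChangeMs: number;
  private readonly windowMs: number;

  constructor(windowMs: number, startMs: number) {
    this.windowMs = windowMs;
    this.lastChangeMs = startMs;
  }

  // Call this whenever the terminal emits data.
  onData(chunk: string, nowMs: number): void {
    if (chunk.length === 0) return;
    this.buffer += chunk;
    this.lastChangeMs = nowMs; // any new output restarts the window
  }

  // Output is "stable" once nothing has changed for a full window.
  isStable(nowMs: number): boolean {
    return nowMs - this.lastChangeMs >= this.windowMs;
  }

  output(): string {
    return this.buffer;
  }
}
```

The windowMs value is the knob both questions are about: every command waits at least that long before its output is returned, and any command that stays silent for longer than the window is reported as stable whether or not it has finished.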

Long-running quiet commands

For point 1, we need to consider what happens when a command is long-running and deliberately produces no output for a period of time. For example, docker build --quiet suppresses output until completion and then prints the image ID, so it looks “stable” immediately but will actually run for a while.

What if we set the output stability timeout to something large, like 10 minutes? That will work, but that brings us to point 2: we’d then be waiting 10 minutes for every command that the agent wants to run, including things like ls.

It could be argued that a long-running quiet command is somewhat of an edge case! But in fact, in our agent sandboxes, which are underpowered compared to “normal” developer machines, this was a recurring issue, most frequently in build steps, linting and typechecking for large projects.

Conclusion

We haven’t mentioned handling control characters and arrow keys. We’ve found an implementation that works for our Bash tool, but we won’t give it away here. (It’s simpler than it sounds, and we’re happy to compare notes if you’re curious!)

In general, we’ve found that the more robust a Bash tool is to agent inputs, the better the agent performs, so we have a few more tricks up our sleeves to deal with tricky edge cases.

Unfortunately, we can’t say what these are for commercial reasons, but we can say that there are no subagents or extra terminal tooling involved.

We’re pretty pleased with the success rate of the current version of our Bash tool. Coming second on Terminal Bench to a company whose primary offering is an actual terminal is great, and we’ll continue to improve our agent and its tools!